2025

Z. Yao, S. Liu, Y. Wang, X. Yuan, L. Fang

Nature, 2025.

Latex Bibtex Citation:

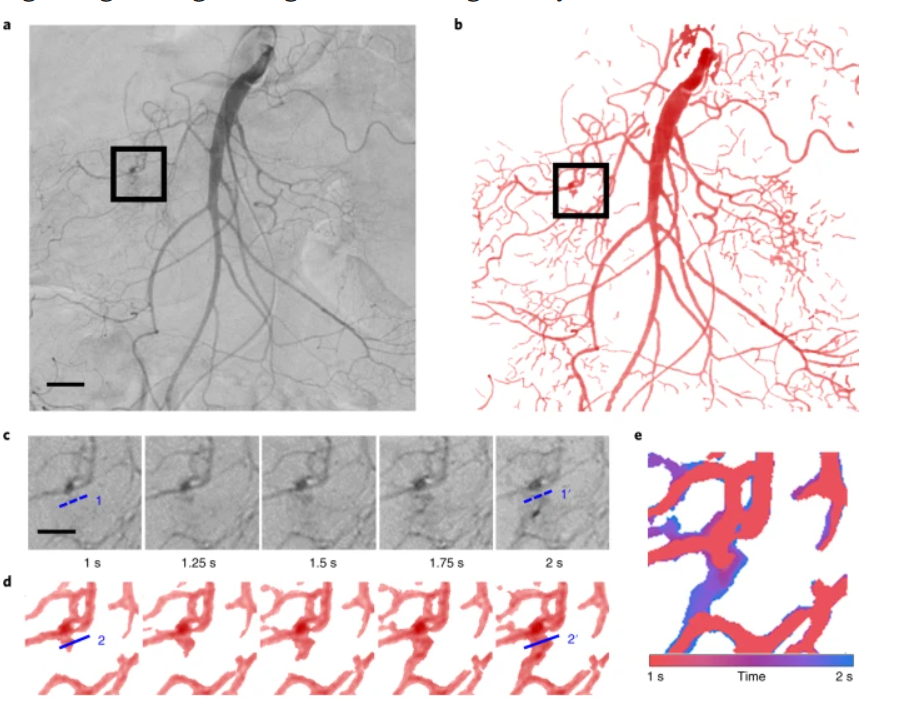

T. Zhou, Y. Jiang, Z. Xu, Z. Xue, L. Fang

Nature Communications, 2025.

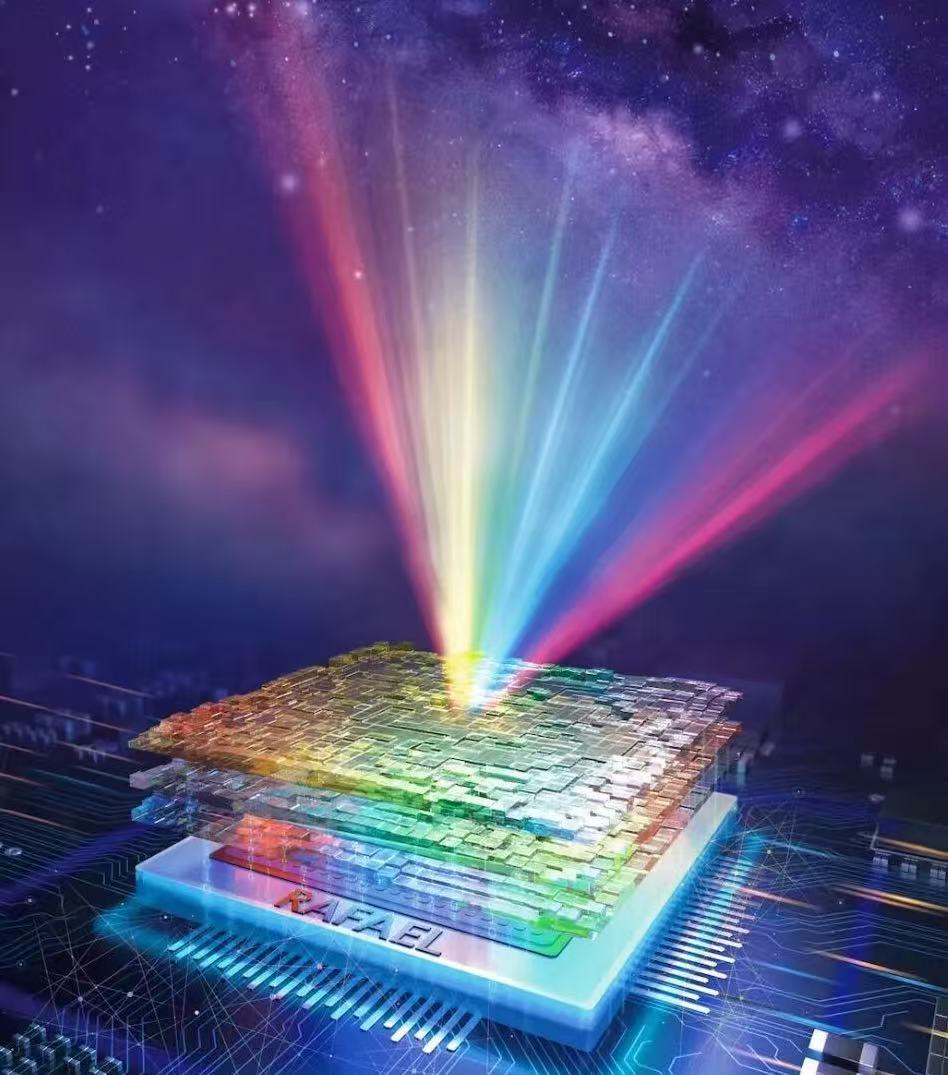

In the artificial intelligence era propelled by complex computational models, photonic computing represents a promising approach for energy-efficient machine learning; however, error accumulation inherent to the analog nature limits their depth to around ten layers, restricting advanced computing capabilities towards large language models (LLMs). In this study, we identify that such error accumulation arises from propagation redundancies. By introducing perturbations on-chip to decouple computational correlations, we eliminate the redundancy and develop deep photonic learning with a single-layer photonic computing (SLiM) chip that exhibits error tolerance. The SLiM chip overcomes the depth limitations of optical neural networks, allowing for error rates to be constrained across more than 200 layers, and extends spatial depth from millimeter to hundred-meter scale, enabling a three-dimensional chip cluster. We experimentally constructed a neural network with 100 layers for image classification, along with a 0.345-billion-parameter LLM with 384 layers for text generation, and a 0.192-billion-parameter LLM with 640 layers for image generation, all achieving performances comparable to ideal simulations at 10-GHz data rate. This error-tolerant single-layer chip initiates the advancement of state-of-the-art deep learning models on efficient analog computing hardware.

Latex Bibtex Citation:

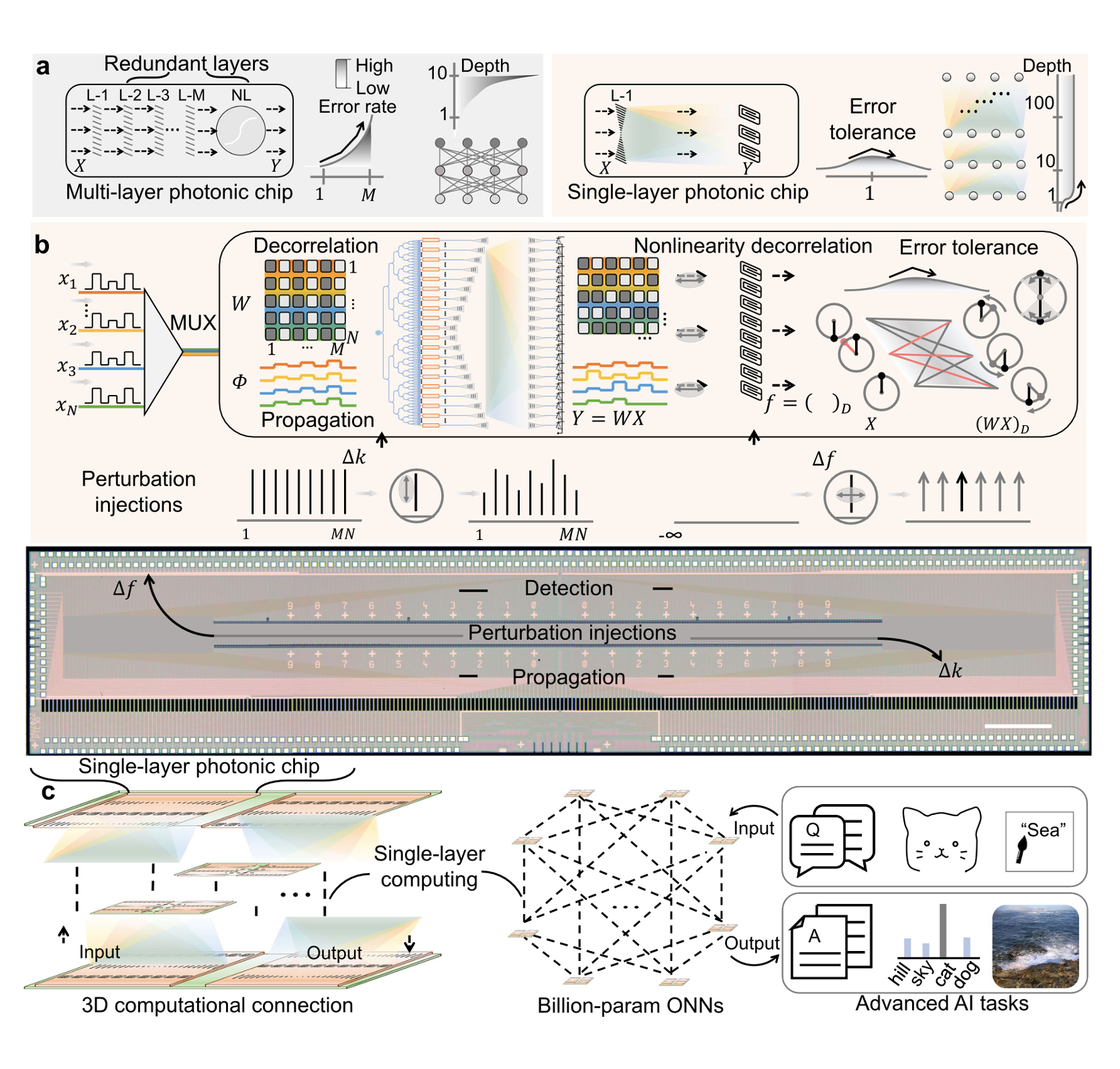

C. Wang, Y. Cheng, Z. Xu, Q. Dai, L. Fang

Nature Photonics, 2025.

Latex Bibtex Citation:

T. Yan, Y. Guo, T. Zhou, G. Shao, S. Li, R. Huang, Q. Dai and L. Fang

Nature Computational Science, 2025.

Latex Bibtex Citation:

H. Huang, X. Liang, Y. Wang, J. Tang, Y. Li, Y. Du, W. Sun, J. Zhang, P. Yao, X. Mou, F. Xu, J. Zhang, Y. Lu, Z. Liu, J. Wang, Z. Jiang, R. Hu, Z. Wang, Q. Zhang, B. Gao, X. Bai, L. Fang, Q. Dai, H. Yin, H. Qian and H. Wu,

Nature Nanotechnology, 2025.

Latex Bibtex Citation:

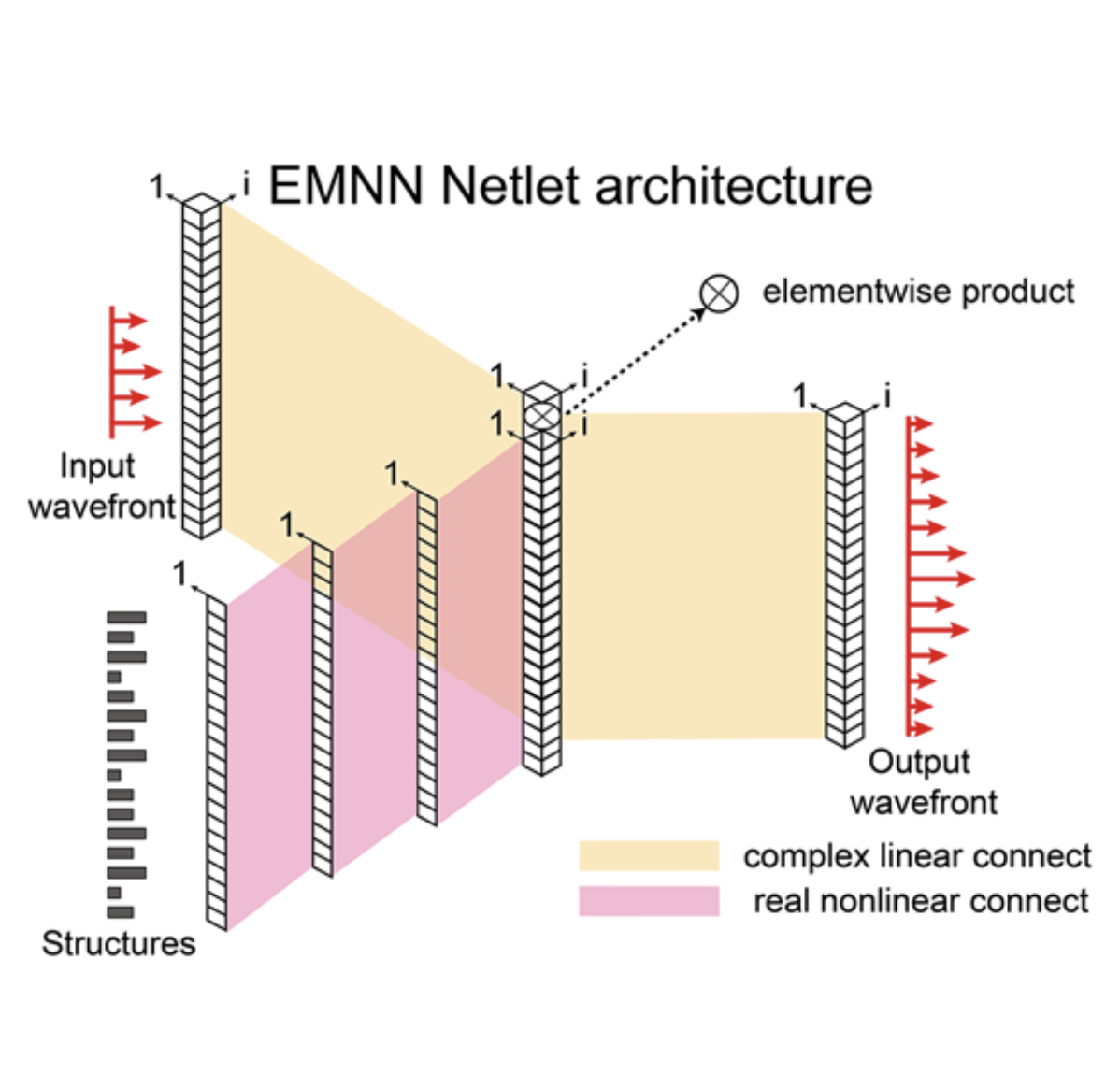

G Shao, T Zhou, T Yan, Y Guo, Y Zhao, R Huang, and L Fang,

Nanophotonics, 2025.

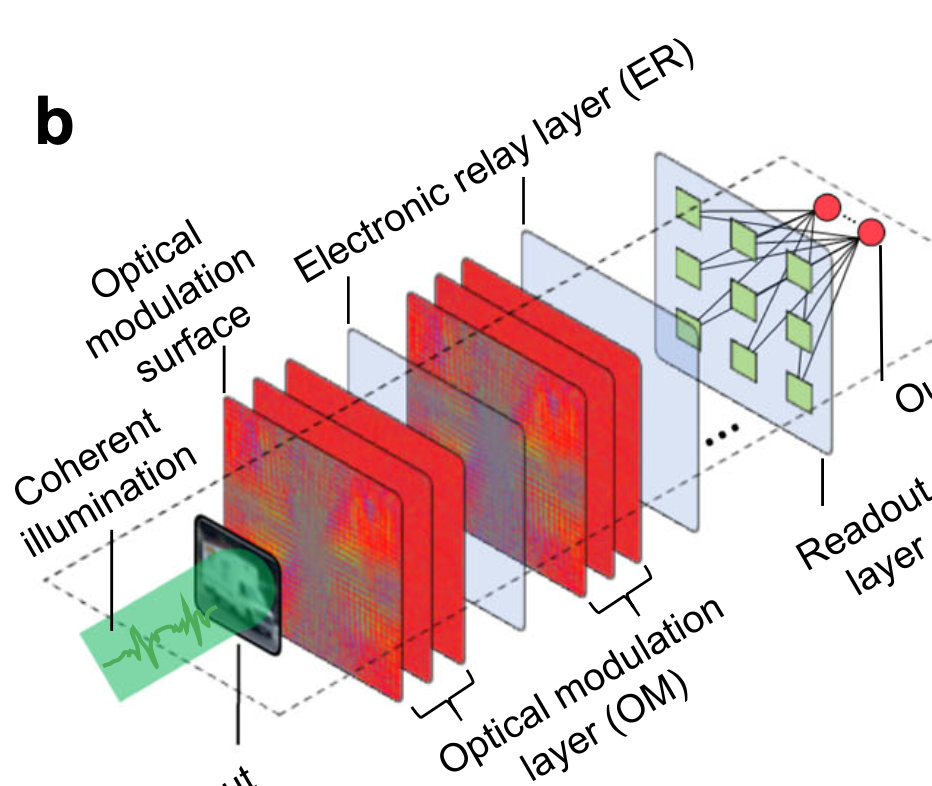

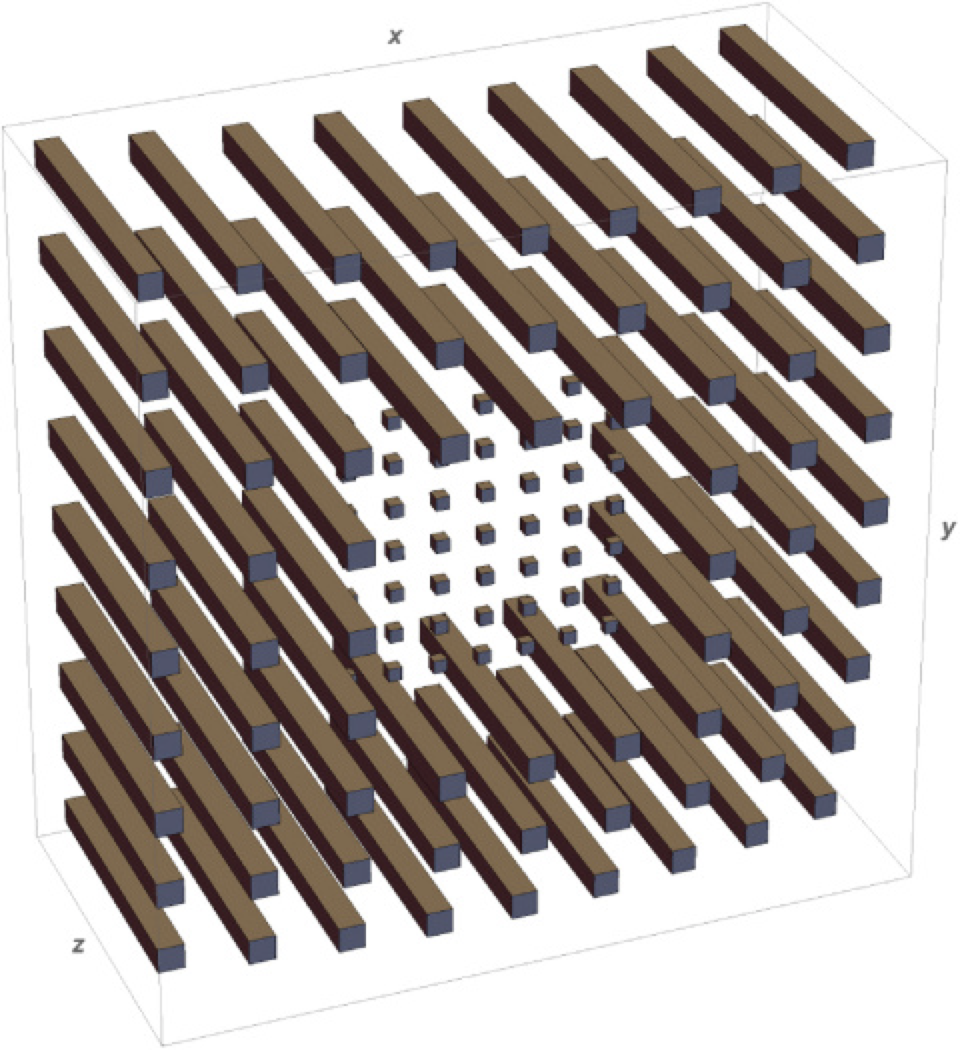

On-chip computing metasystems composed of multilayer metamaterials have the potential to become the next-generation computing hardware endowed with light-speed processing ability and low power consumption but are hindered by current design paradigms. To date, neither numerical nor analytical methods can balance efficiency and accuracy of the design process. To address the issue, a physics-inspired deep learning architecture termed electromagnetic neural network (EMNN) is proposed to enable an efficient, reliable, and flexible paradigm of inverse design. EMNN consists of two parts: EMNN Netlet serves as a local electromagnetic field solver; Huygens–Fresnel Stitch is used for concatenating local predictions. It can make direct, rapid, and accurate predictions of full-wave field based on input fields of arbitrary variations and structures of nonfixed size. With the aid of EMNN, we design computing metasystems that can perform handwritten digit recognition and speech command recognition. EMNN increases the design speed by 17,000 times than that of the analytical model and reduces the modeling error by two orders of magnitude compared to the numerical model. By integrating deep learning techniques with fundamental physical principle, EMNN manifests great interpretability and generalization ability beyond conventional networks. Additionally, it innovates a design paradigm that guarantees both high efficiency and high fidelity. Furthermore, the flexible paradigm can be applicable to the unprecedentedly challenging design of large-scale, high-degree-of-freedom, and functionally complex devices embodied by on-chip optical diffractive networks, so as to further promote the development of computing metasystems.

Latex Bibtex Citation:

Z. Wang, Y. Peng, L. Fang, L. Gao,

Optica, 2025.

Optical imaging has traditionally relied on hardware to fulfill its imaging function, producing output measures that mimic the original objects. Developed separately, digital algorithms enhance or analyze these visual representations, rather than being integral to the imaging process. The emergence of computational optical imaging has blurred the boundary between hardware and algorithm, incorporating computation in silico as an essential step in producing the final image. It provides additional degrees of freedom in system design and enables unconventional capabilities and greater efficiency. This mini-review surveys various perspectives of such interactions between physical and digital layers. It discusses the representative works where dedicated algorithms join the specialized imaging modalities or pipelines to achieve images of unprecedented quality. It also examines the converse scenarios where hardware, such as optical elements and sensors, is engineered to perform image processing, partially or fully replacing computer-based counterparts. Finally, the review highlights the emerging field of end-to-end optimization, where optics and algorithms are co-designed using differentiable models and task-specific loss functions. Together, these advancements provide an overview of the current landscape of computational optical imaging, delineating significant progress while uncovering diverse directions and potential in this rapidly evolving field.

Latex Bibtex Citation:

2024

Z. Xue, T. Zhou, Z. Xu, S. Yu, Q. Dai, and L. Fang,

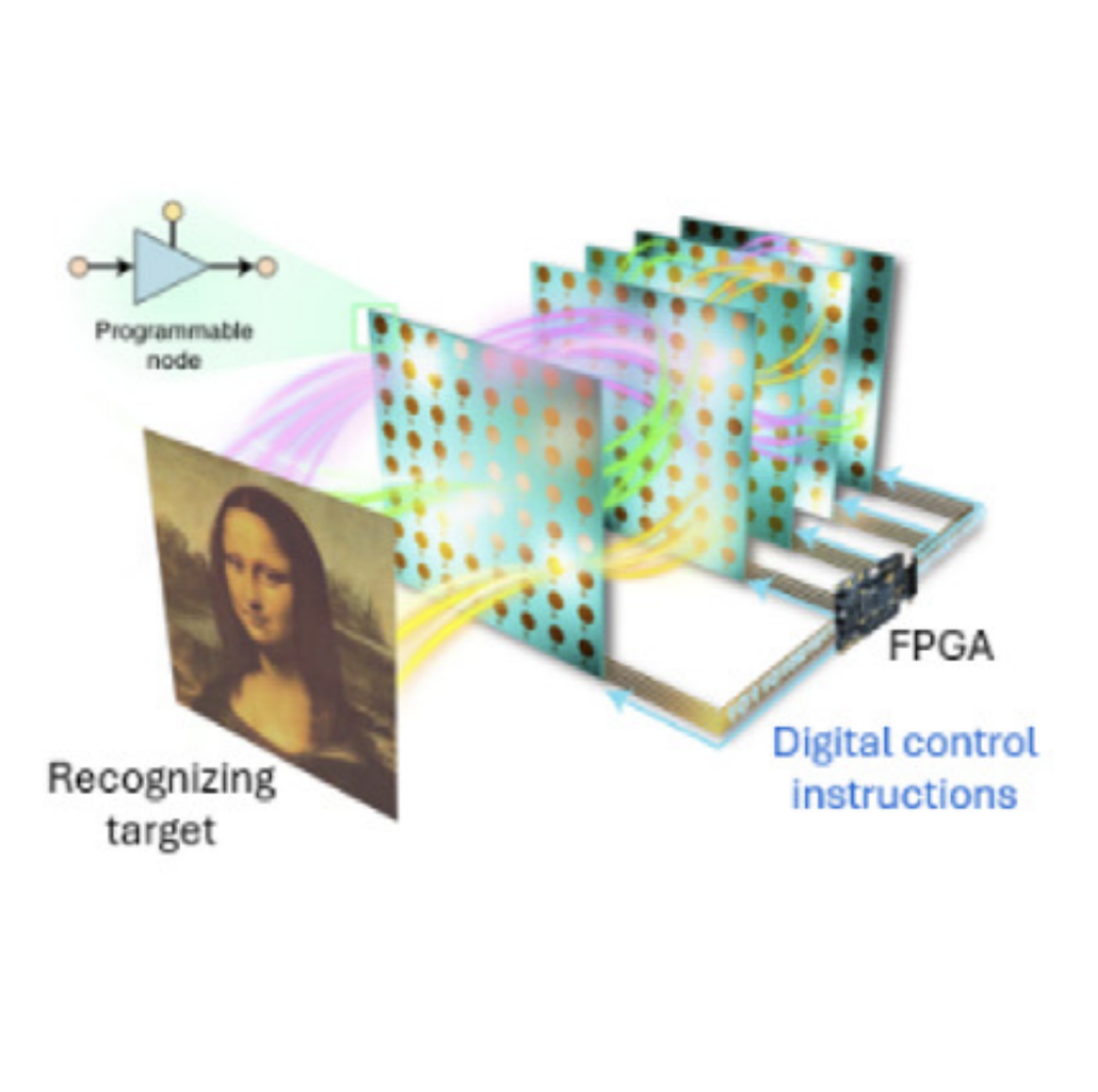

Nature, 2024.

Optical computing promises to improve the speed and energy efficiency of machine learning applications1–6. However, current approaches to efficiently train these models are limited by in silico emulation on digital computers. Here we develop a method called fully forward mode (FFM) learning, which implements the compute- intensive training process on the physical system. The majority of the machine learning operations are thus efficiently conducted in parallel on site, alleviating numerical modelling constraints. In free-space and integrated photonics, we experimentally demonstrate optical systems with state-of-the-art performances for a given network size. FFM learning shows training the deepest optical neural networks with millions of parameters achieves accuracy equivalent to the ideal model. It supports all-optical focusing through scattering media with a resolution of the diffraction limit; it can also image in parallel the objects hidden outside the direct line of sight at over a kilohertz frame rate and can conduct all-optical processing with light intensity as weak as subphoton per pixel (5.40 × 1018- operations-per-second- per-watt energy efficiency) at room temperature. Furthermore, we prove that FFM learning can automatically search non-Hermitian exceptional points without an analytical model. FFM learning not only facilitates orders-of-magnitude-faster learning processes, but can also advance applied and theoretical fields such as deep neural networks, ultrasensitive perception and topological photonics.

Latex Bibtex Citation:

Xue, Z., Zhou, T., Xu, Z. et al. Fully forward mode training for optical neural networks. Nature 632, 280–286 (2024). https://doi.org/10.1038/s41586-024-07687-4

Z. Xu, T. Zhou, M. Ma, C. Deng, Q. Dai, and L. Fang,

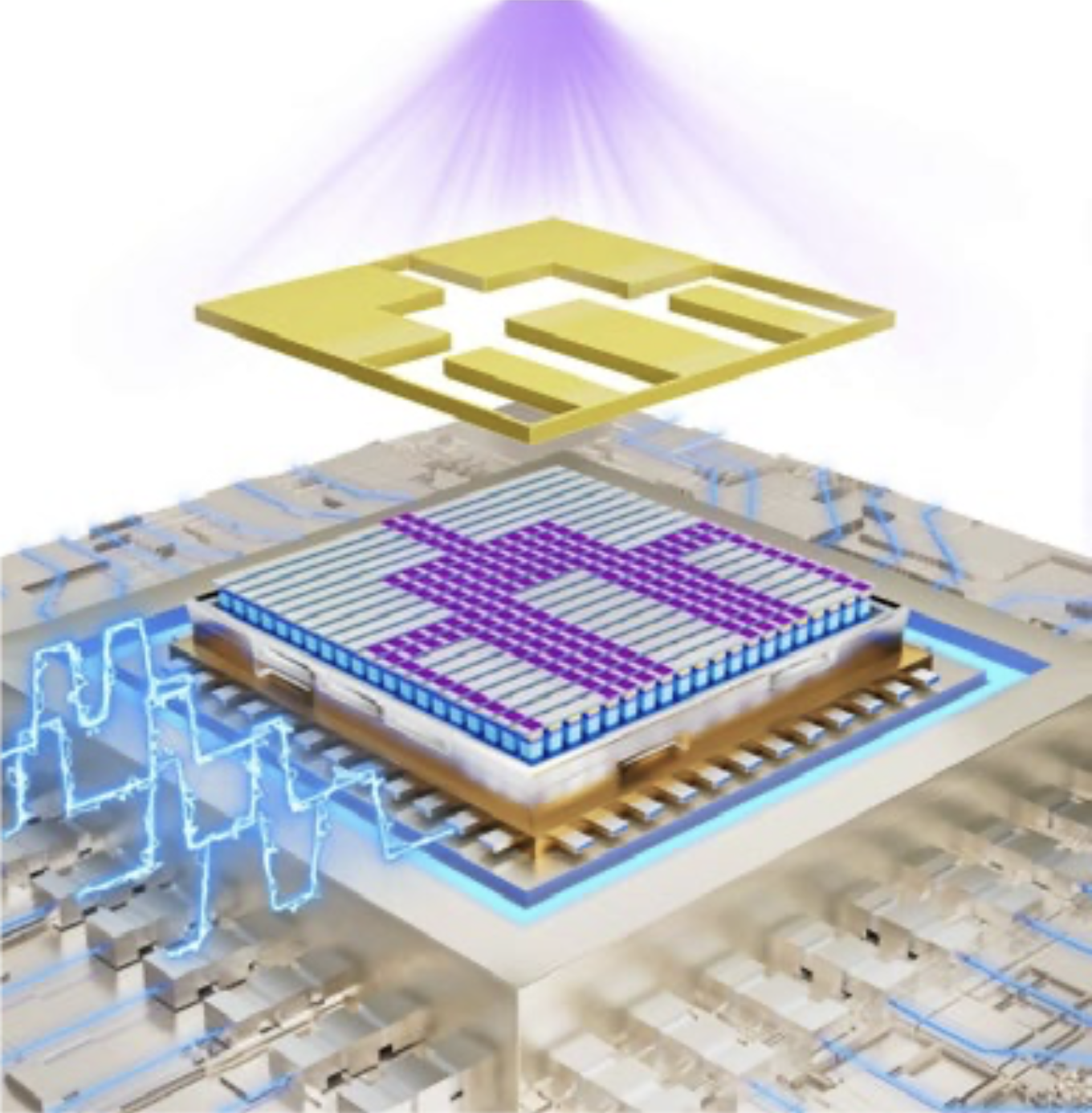

Science, 2024.

Latex Bibtex Citation:

@article{xu2024large,

title={Large-scale photonic chiplet Taichi empowers 160-TOPS/W artificial general intelligence},

author={Xu, Zhihao and Zhou, Tiankuang and Ma, Muzhou and Deng, ChenChen and Dai, Qionghai and Fang, Lu},

journal={Science},

volume={384},

number={6692},

pages={202--209},

year={2024},

publisher={American Association for the Advancement of Science}

}

Y. Guo, Y. Hao, S. Wan, H. Zhang, L. Zhu, Y. Zhang, J. Wu, Q. Dai, and L. Fang,

Nature Photonics, 2024.

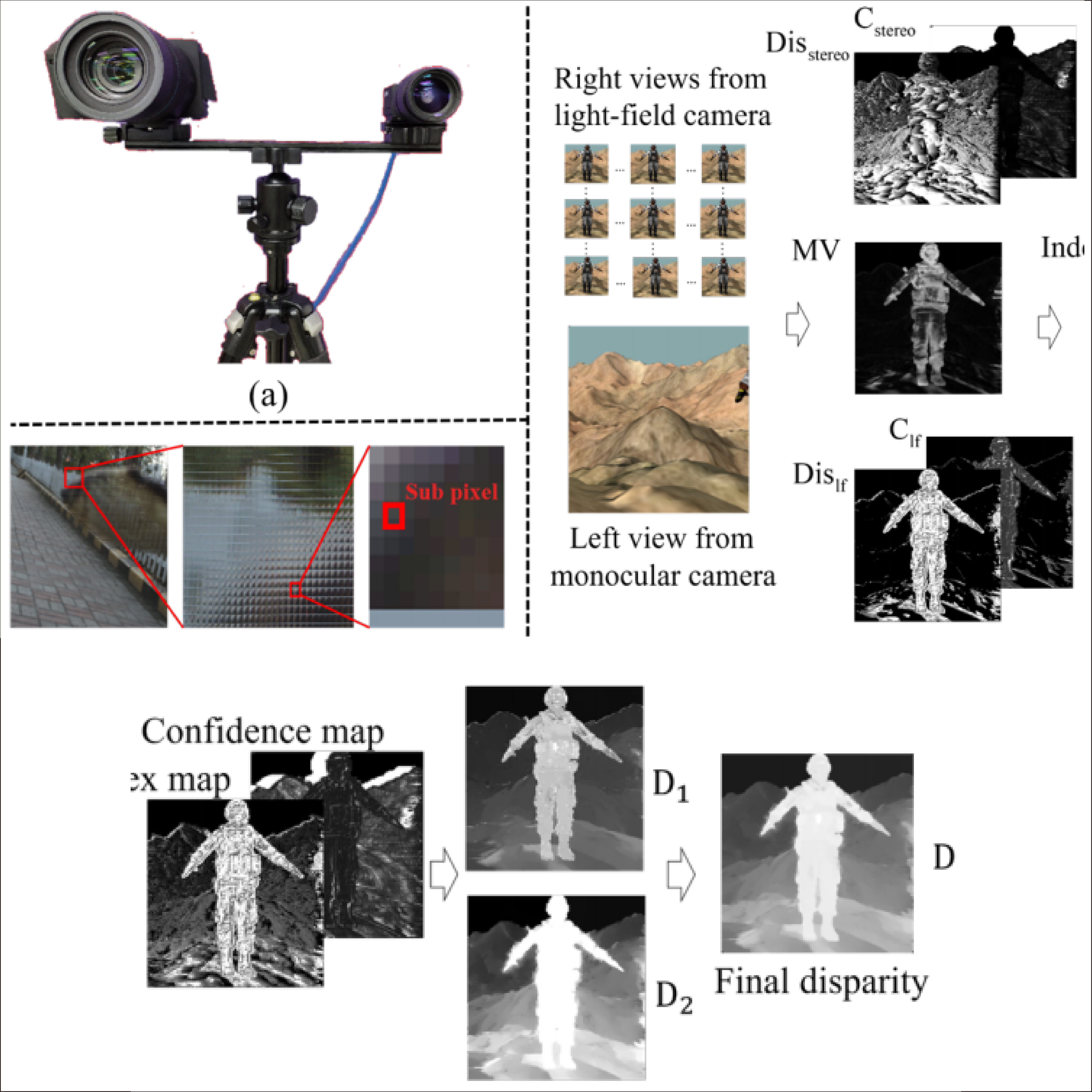

Turbulence is a complex and chaotic state of fuid motion. Atmospheric turbulence within the Earth’s atmosphere poses fundamental challenges for applications such as remote sensing, free-space optical communications and astronomical observation due to its rapid evolution across temporal and spatial scales. Conventional methods for studying atmospheric turbulence face hurdles in capturing the wide-feld distribution of turbulence due to its transparency and anisoplanatism. Here we develop a light-feld-based plug-and-play wide-feld wavefront sensor (WWS), facilitating the direct observation of atmospheric turbulence over 1,100 arcsec at 30 Hz. The experimental measurements agreed with the von Kármán turbulence model, further verifed using a diferential image motion monitor. Attached to an 80 cm telescope, our WWS enables clear turbulence profling of three layers below an altitude of 750 m and high-resolution aberration-corrected imaging without additional deformable mirrors. The WWS also enables prediction of the evolution of turbulence dynamics within 33 ms using a convolutional recurrent neural network with wide-feld measurements, leading to more accurate pre-compensation of turbulence-induced errors during free-space optical communication. Wide-feld sensing of dynamic turbulence wavefronts provides new opportunities for studying the evolution of turbulence in the broad feld of atmospheric optics.

Latex Bibtex Citation:

@article{guo2024direct,

title={Direct observation of atmospheric turbulence with a video-rate wide-field wavefront sensor},

author={Guo, Yuduo and Hao, Yuhan and Wan, Sen and Zhang, Hao and Zhu, Laiyu and Zhang, Yi and Wu, Jiamin and Dai, Qionghai and Fang, Lu},

journal={Nature Photonics},

pages={1--9},

year={2024},

publisher={Nature Publishing Group UK London}

}

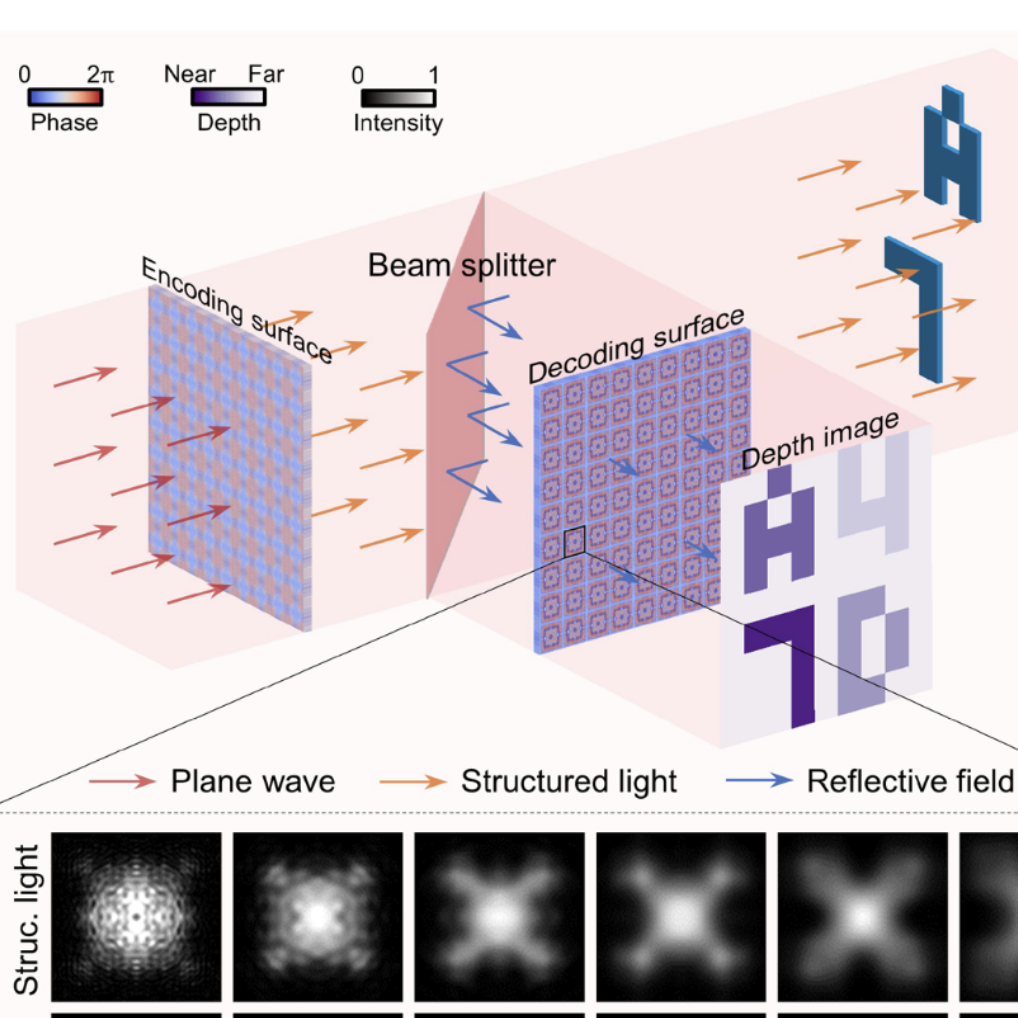

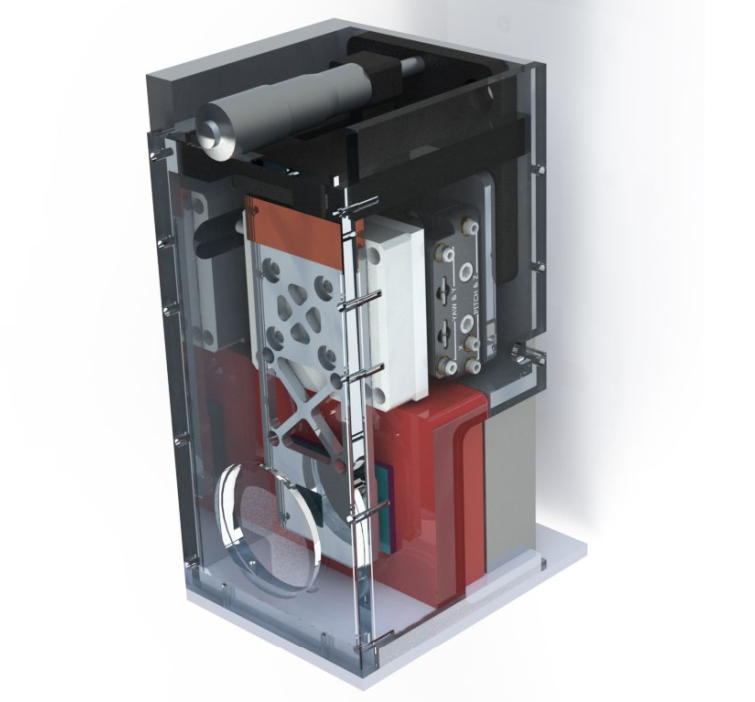

T. Yan, T. Zhou, Y. Guo, Y. Zhao, G. Shao, J. Wu, R. Huang, Q. Dai, and L. Fang,

Science Advances, 2024.

Three-dimensional (3D) perception is vital to drive mobile robotics’ progress toward intelligence. However, state-of-the-art 3D perception solutions require complicated postprocessing or point-by-point scanning, sufferingcomputational burden, latency of tens of milliseconds, and additional power consumption. Here, we propose aparallel all-optical computational chipset 3D perception architecture (Aop3D) with nanowatt power and lightspeed. The 3D perception is executed during the light propagation over the passive chipset, and the capturedlight intensity distribution provides a direct reflection of the depth map, eliminating the need for extensive post-processing. The prototype system of Aop3D is tested in various scenarios and deployed to a mobile robot, demon-strating unprecedented performance in distance detection and obstacle avoidance. Moreover, Aop3D works at aframe rate of 600 hertz and a power consumption of 33.3 nanowatts per meta-pixel experimentally. Our work ispromising toward next-generation direct 3D perception techniques with light speed and high energy efficiency.

Latex Bibtex Citation:

@article{yan2024nanowatt,

title={Nanowatt all-optical 3D perception for mobile robotics},

author={Yan, Tao and Zhou, Tiankuang and Guo, Yanchen and Zhao, Yun and Shao, Guocheng and Wu, Jiamin and Huang, Ruqi and Dai, Qionghai and Fang, Lu},

journal={Science Advances},

volume={10},

number={27},

pages={eadn2031},

year={2024},

publisher={American Association for the Advancement of Science}

}

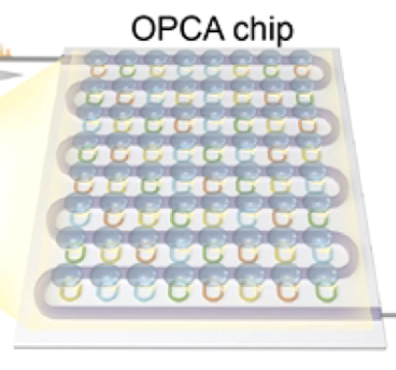

W. Wu, T. Zhou, and L. Fang,

OSA Optica, 2024.

Image processing, transmission, and reconstruction constitute a major proportion of information technology. The rapid expansion of ubiquitous edge devices and data centers has led to substantial demands on the bandwidth and efficiency of image processing, transmission, and reconstruction. The frequent conversion of serial signals between the optical and electrical domains, coupled with the gradual saturation of electronic processors, has become the bottleneck of end-toend machine vision. Here, we present an optical parallel computational array chip (OPCA chip) for end-to-end processing, transmission, and reconstruction of optical intensity images. By proposing constructive and destructive computing modes on the large-bandwidth resonant optical channels, a parallel computational model is constructed to implement end-to-end optical neural network computing. The OPCA chip features a measured response time of 6 ns and an optical bandwidth of at least 160 nm. Optical image processing can be efficiently executed with minimal energy consumption and latency, liberated from the need for frequent optical–electronic and analog–digital conversions. The proposed optical computational sensor opens the door to extremely high-speed processing, transmission, and reconstruction of visible contents with nanoseconds response time and terahertz bandwidth.

Latex Bibtex Citation:

@article{wu2024parallel,

title={Parallel photonic chip for nanosecond end-to-end image processing, transmission, and reconstruction},

author={Wu, Wei and Zhou, Tiankuang and Fang, Lu},

journal={Optica},

volume={11},

number={6},

pages={831--837},

year={2024},

publisher={Optica Publishing Group}

}

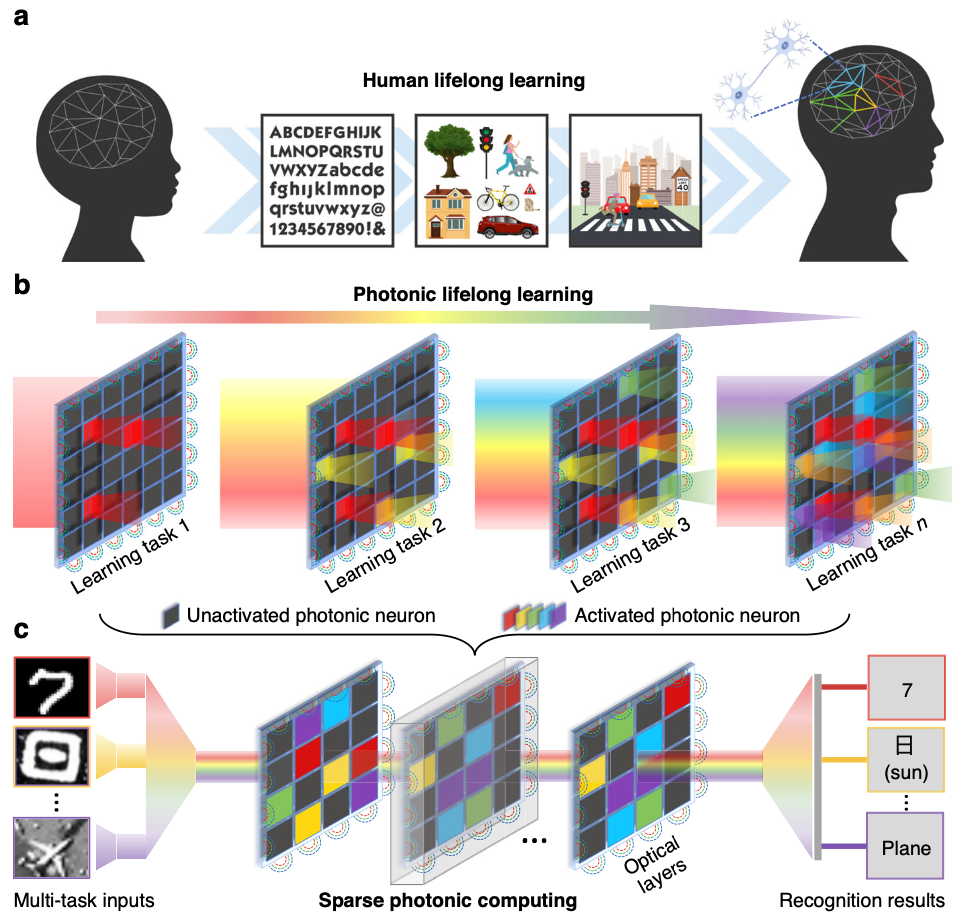

Y. Cheng, J. Zhang, T. Zhou, Y. Wang, Z. Xu, X. Yuan, and L. Fang,

Light: Science & Applications, 2024.

Scalable, high-capacity, and low-power computing architecture is the primary assurance for increasingly manifold and large-scale machine learning tasks. Traditional electronic artificial agents by conventional power-hungry processors have faced the issues of energy and scaling walls, hindering them from the sustainable performance improvement and iterative multi-task learning. Referring to another modality of light, photonic computing has been progressively applied in high-efficient neuromorphic systems. Here, we innovate a reconfigurable lifelong-learning optical neural network (L2ONN), for highly-integrated tens-of-task machine intelligence with elaborated algorithm-hardware co-design. Benefiting from the inherent sparsity and parallelism in massive photonic connections, L2ONN learns each single task by adaptively activating sparse photonic neuron connections in the coherent light field, while incrementally acquiring expertise on various tasks by gradually enlarging the activation. The multi-task optical features are parallelly processed by multi-spectrum representations allocated with different wavelengths. Extensive evaluations on free-space and on-chip architectures confirm that for the first time, L2ONN avoided the catastrophic forgetting issue of photonic computing, owning versatile skills on challenging tens-of-tasks (vision classification, voice recognition, medical diagnosis, etc.) with a single model. Particularly, L2ONN achieves more than an order of magnitude higher efficiency than the representative electronic artificial neural networks, and 14× larger capacity than existing optical neural networks while maintaining competitive performance on each individual task. The proposed photonic neuromorphic architecture points out a new form of lifelong learning scheme, permitting terminal/edge AI systems with light-speed efficiency and unprecedented scalability.

Latex Bibtex Citation:

@article{cheng2024photonic,

title={Photonic neuromorphic architecture for tens-of-task lifelong learning},

author={Cheng, Yuan and Zhang, Jianing and Zhou, Tiankuang and Wang, Yuyan and Xu, Zhihao and Yuan, Xiaoyun and Fang, Lu},

journal={Light: Science \& Applications},

volume={13},

number={1},

pages={56},

year={2024},

publisher={Nature Publishing Group UK London}

}

T. Ma, B. Bai, H. Lin, H. Wang, Y. Wang, L. Luo, and L. Fang,

Proc. of Computer Vision and Pattern Recognition (CVPR), 2024.

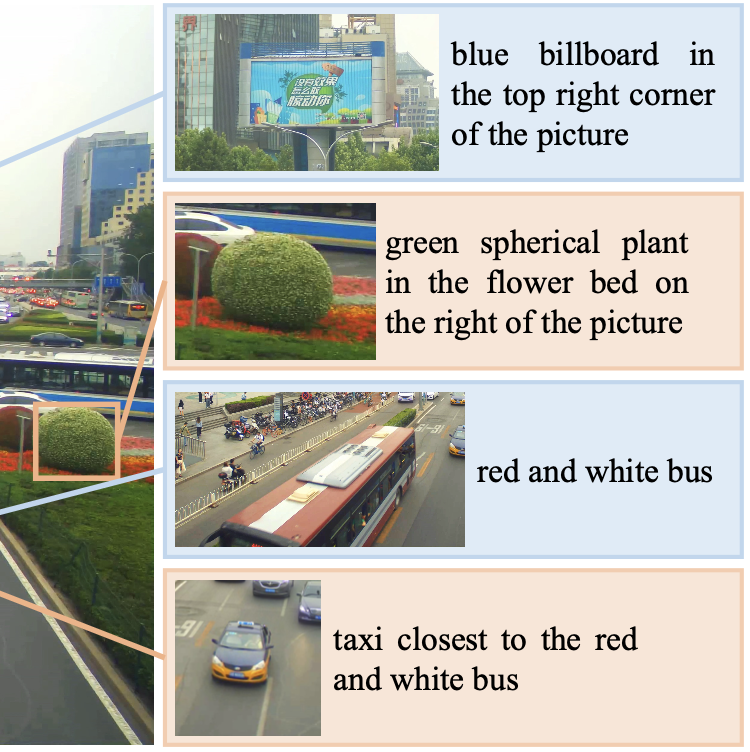

Visual grounding refers to the process of associating

natural language expressions with corresponding regions

within an image. Existing benchmarks for visual grounding

primarily operate within small-scale scenes with a few objects. Nevertheless, recent advances in imaging technology

have enabled the acquisition of gigapixel-level images, providing high-resolution details in large-scale scenes containing numerous objects. To bridge this gap between imaging

and computer vision benchmarks and make grounding more

practically valuable, we introduce a novel dataset, named

GigaGrounding, designed to challenge visual grounding

models in gigapixel-level large-scale scenes. We extensively

analyze and compare the dataset with existing benchmarks,

demonstrating that GigaGrounding presents unique challenges such as large-scale scene understanding, gigapixel-level resolution, significant variations in object scales, and

the “multi-hop expressions”. Furthermore, we introduced

a simple yet effective grounding approach, which employs

a “glance-to-zoom-in” paradigm and exhibits enhanced

capabilities for addressing the GigaGrounding task. The dataset is available at www.gigavision.ai.

Latex Bibtex Citation:

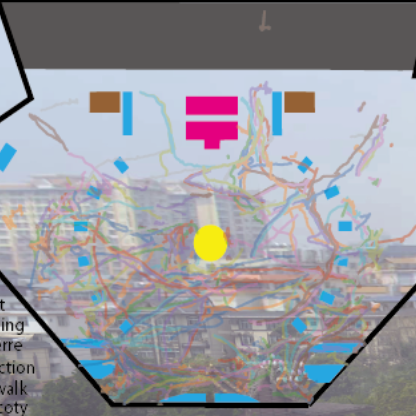

H. Lin, C. Wei, Y. Guo, L. He, Y. Zhao, S. Li, and L. Fang,

Proc. of Computer Vision and Pattern Recognition (CVPR), 2024.

Pedestrian trajectory prediction is a well-established task with significant recent advancements. However, existing datasets are unable to fulfill the demand for studying minute-level long-term trajectory prediction, mainly due to the lack of high-resolution trajectory observation in the wide field of view (FoV). To bridge this gap, we introduce a novel dataset named GigaTraj, featuring videos capturing a wide FoV with ∼4 × 104 m2 and high-resolution imagery at the gigapixel level. Furthermore, GigaTraj includes comprehensive annotations such as bounding boxes, identity associations, world coordinates, group/interaction relationships, and scene semantics. Leveraging these multimodal annotations, we evaluate and validate the state-of-the-art approaches for minute-level long-term trajectory prediction in large-scale scenes. Extensive experiments and analyses have revealed that long-term prediction for pedestrian trajectories presents numerous challenges, indicating a vital new direction for trajectory research. The dataset is available at www.gigavision.ai.

Latex Bibtex Citation:

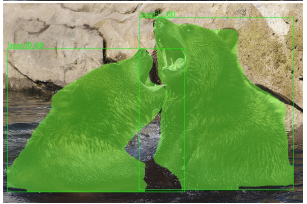

H. Ying, Y. Yin, J. Zhang, F. Wang, T. Yu, R. Huang, and L. Fang,

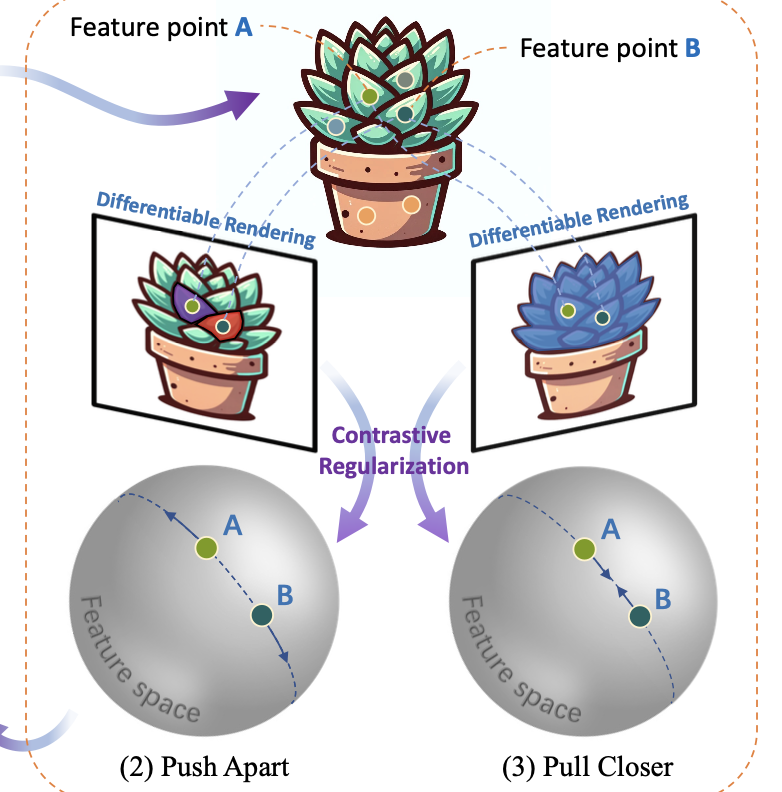

Proc. of Computer Vision and Pattern Recognition (CVPR), 2024.

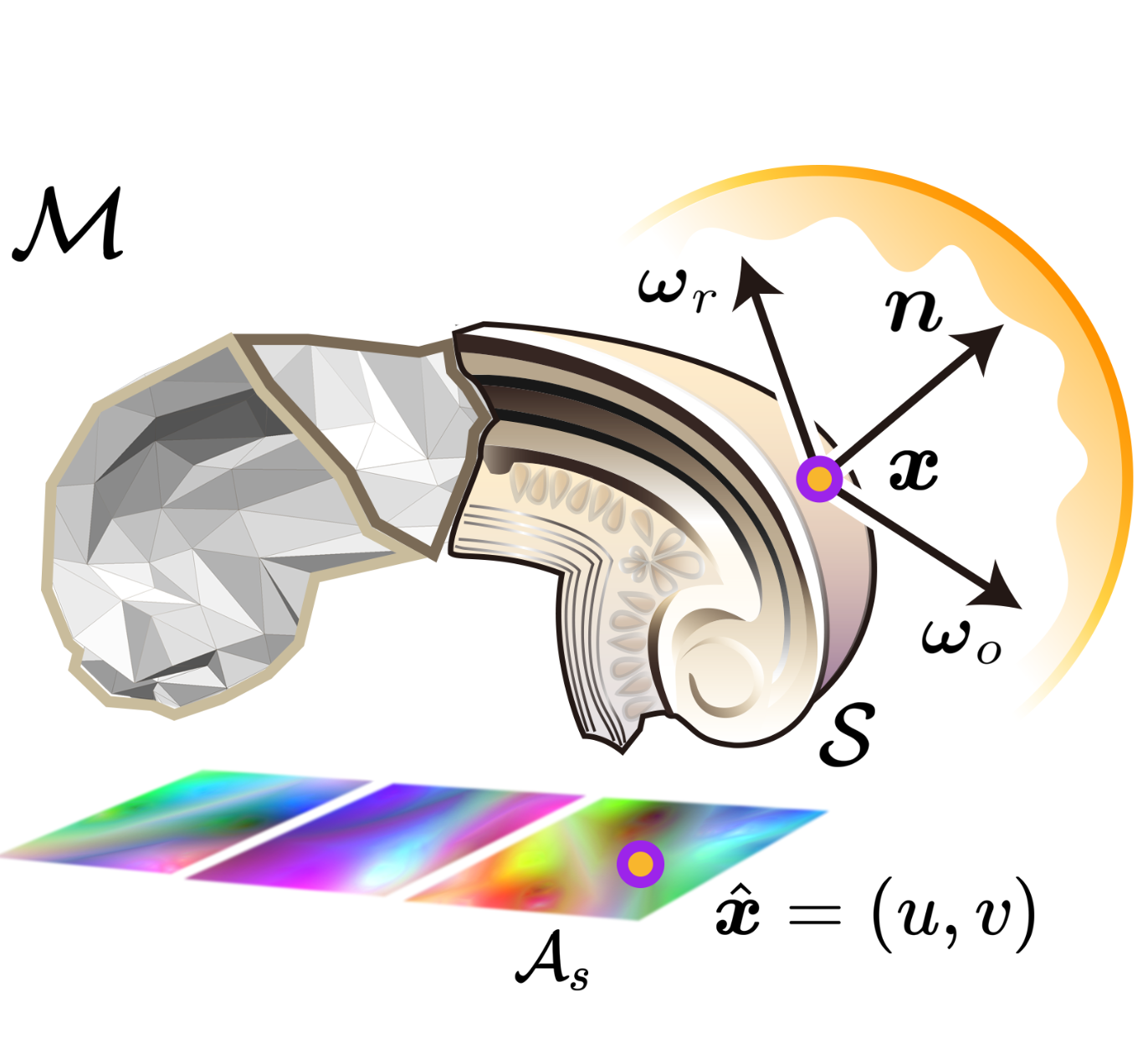

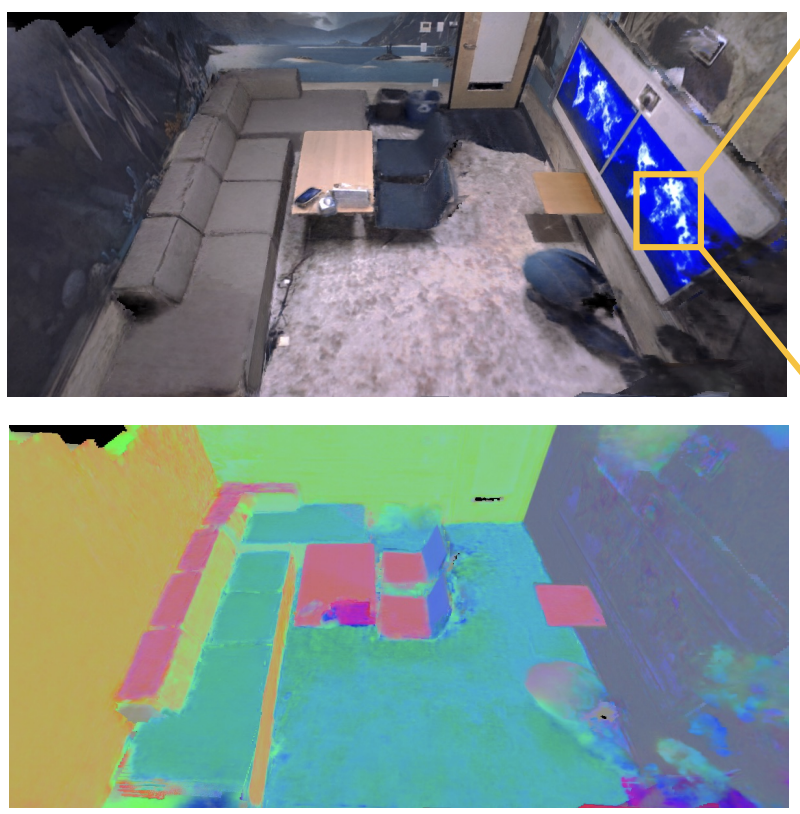

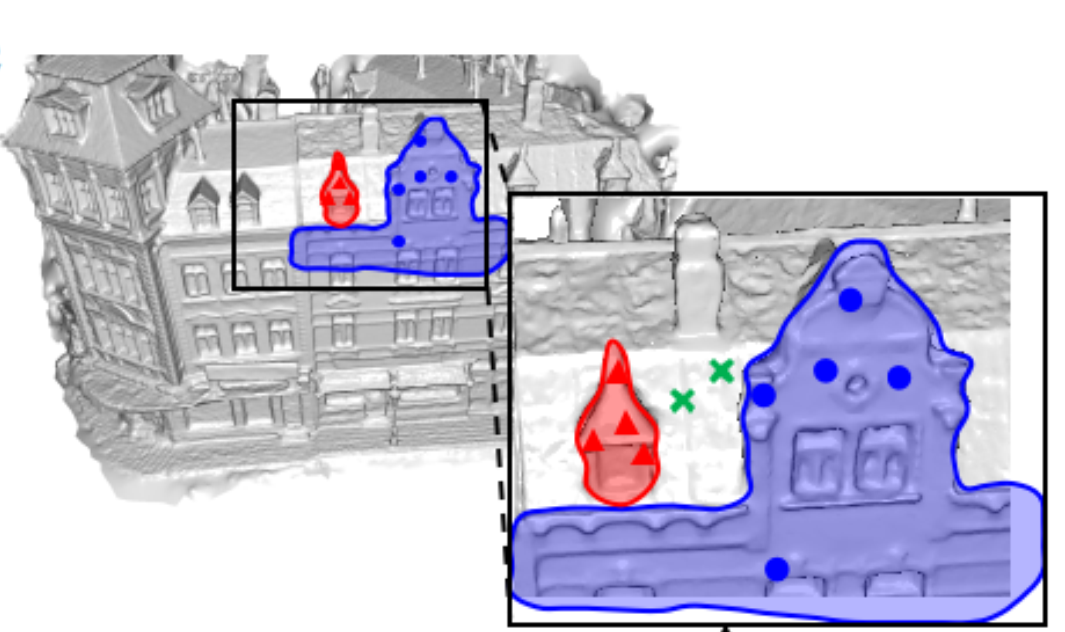

Towards holistic understanding of 3D scenes, a general 3D segmentation method is needed that can segment diverse objects without restrictions on object quantity or categories, while also reflecting the inherent hierarchical structure. To achieve this, we propose OmniSeg3D, an omniversal segmentation method aims for segmenting anything in 3D all at once. The key insight is to lift multi-view inconsistent 2D segmentations into a consistent 3D feature field through a hierarchical contrastive learning framework, which is accomplished by two steps. Firstly, we design a novel hierarchical representation based on category-agnostic 2D segmentations to model the multi-level relationship among pixels. Secondly, image features rendered from the 3D feature field are clustered at different levels, which can be further drawn closer or pushed apart according to the hierarchical relationship between different levels. In tackling the challenges posed by inconsistent 2D segmentations, this framework yields a global consistent 3D feature field, which further enables hierarchical segmentation, multi-object selection, and global discretization. Extensive experiments demonstrate the effectiveness of our method on high-quality 3D segmentation and accurate hierarchical structure understanding. A graphical user interface further facilitates flexible interaction for omniversal 3D segmentation.

Latex Bibtex Citation:

@misc{ying2023omniseg3d,

title={OmniSeg3D: Omniversal 3D Segmentation via Hierarchical Contrastive Learning},

author={Haiyang Ying and Yixuan Yin and Jinzhi Zhang and Fan Wang and Tao Yu and Ruqi Huang and Lu Fang},

year={2023},

eprint={2311.11666},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

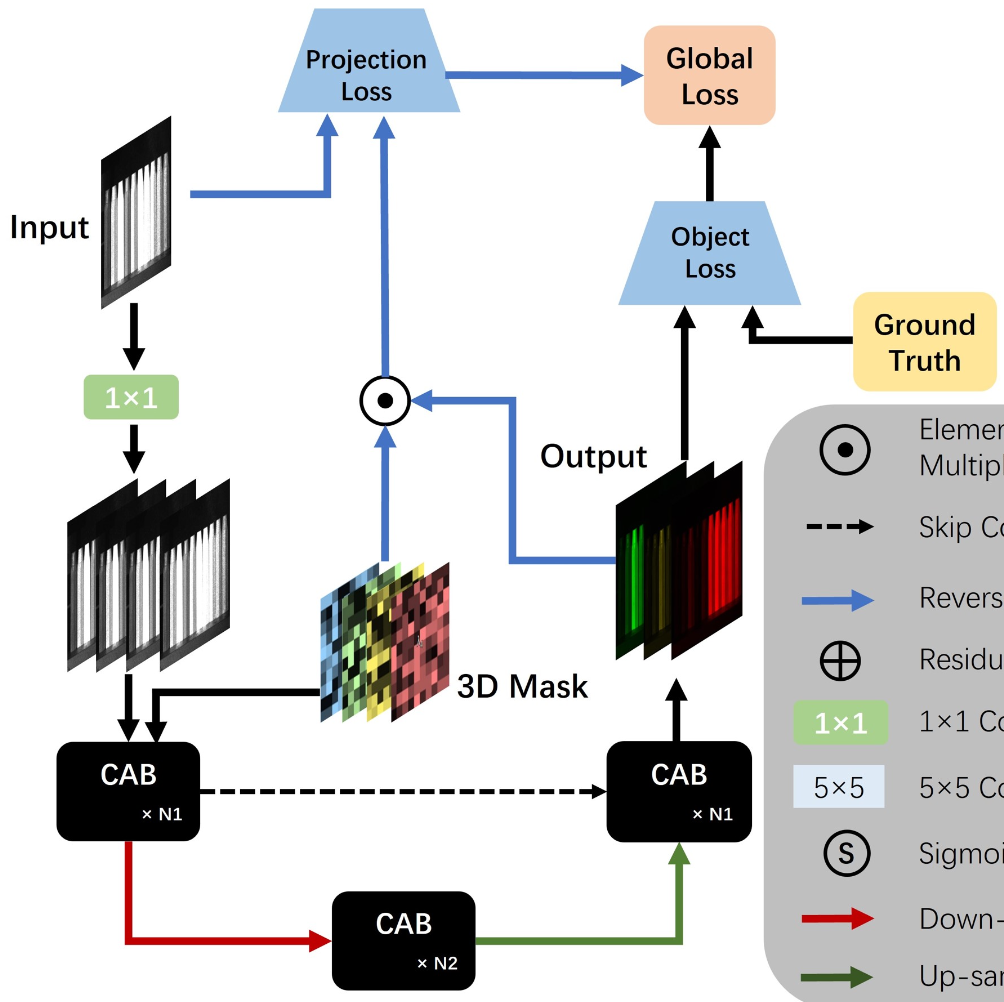

Z. Yao, S. Liu, X. Yuan, and L. Fang,

Proc. of Computer Vision and Pattern Recognition (CVPR), 2024.

Compressive spectral image reconstruction is a critical method for acquiring images with high spatial and spectral resolution. Current advanced methods, which involve designing deeper networks or adding more self-attention modules, are limited by the scope of attention modules and the irrelevance of attentions across different dimensions. This leads to difficulties in capturing non-local mutation features in the spatial-spectral domain and results in a significant parameter increase but only limited performance improvement. To address these issues, we propose SPECAT, a SPatial-spEctral Cumulative-Attention Transformer designed for high-resolution hyperspectral image reconstruction. SPECAT utilizes Cumulative-Attention Blocks (CABs) within an efficient hierarchical framework to extract features from non-local spatial-spectral details. Furthermore, it employs a projection-object Dual-domain Loss Function (DLF) to integrate the optical path constraint, a physical aspect often overlooked in current methodologies. Ultimately, SPECAT not only significantly enhances the reconstruction quality of spectral details but also breaks through the bottleneck of mutual restriction between the cost and accuracy in existing algorithms. Our experimental results demonstrate the superiority of SPECAT, achieving 40.3 dB in hyperspectral reconstruction benchmarks, outperforming the state-of-the-art (SOTA) algorithms by 1.2 dB while using only 5% of the network parameters and 10% of the computational cost. The code is available at https://github.com/THU-luvision/SPECAT.

Latex Bibtex Citation:

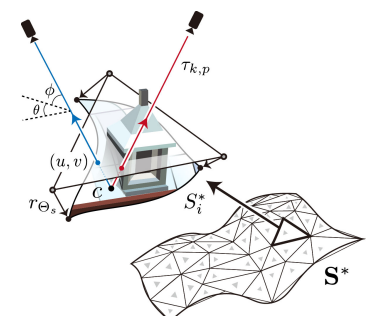

G. Wang, J. Zhang, F. Wang, R. Huang, and L. Fang,

Proc. of Computer Vision and Pattern Recognition (CVPR), 2024.

We propose XScale-NVS for high-fidelity cross-scale

novel view synthesis of real-world large-scale scenes. Existing representations based on explicit surface suffer from

discretization resolution or UV distortion, while implicit

volumetric representations lack scalability for large scenes

due to the dispersed weight distribution and surface ambiguity. In light of the above challenges, we introduce hash

featurized manifold, a novel hash-based featurization coupled with a deferred neural rendering framework. This approach fully unlocks the expressivity of the representation

by explicitly concentrating the hash entries on the 2D manifold, thus effectively representing highly detailed contents

independent of the discretization resolution. We also introduce a novel dataset, namely GigaNVS, to benchmark

cross-scale, high-resolution novel view synthesis of real-world large-scale scenes. Our method significantly outper-

forms competing baselines on various real-world scenes,

yielding an average LPIPS that is ∼ 40% lower than prior

state-of-the-art on the challenging GigaNVS benchmark.

Please see our project page at: xscalenvs.github.io.

Latex Bibtex Citation:

@misc{wang2024xscalenvs,

title={XScale-NVS: Cross-Scale Novel View Synthesis with Hash Featurized Manifold},

author={Guangyu Wang and Jinzhi Zhang and Fan Wang and Ruqi Huang and Lu Fang},

year={2024},

eprint={2403.19517},

archivePrefix={arXiv},

primaryClass={cs.CV} }

2023

Y. Chen, M. Nazhamati, H. Xu, Y. Meng, T. Zhou, G. Li, J. Fan, Q. Wei, J. Wu, F. Qiao, L. Fang, and Q. Dai,

Nature, 2023.

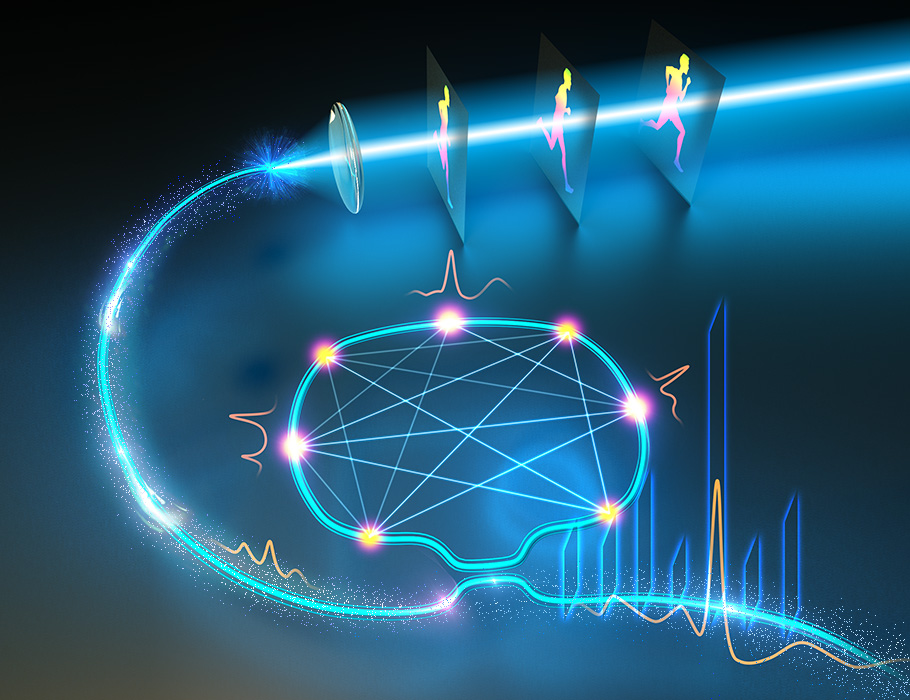

Photonic computing enables faster and more energy-efficient processing of vision

data. However, experimental superiority of deployable systems remains a challenge

because of complicated optical nonlinearities, considerable power consumption of analog-to-digital converters (ADCs) for downstream digital processing and

vulnerability to noises and system errors. Here we propose an all-analog chip

combining electronic and light computing (ACCEL). It has a systemic energy efficiency

of 74.8 peta-operations per second per watt and a computing speed of 4.6 peta-

operations per second (more than 99% implemented by optics), corresponding to

more than three and one order of magnitude higher than state-of-the-art computing

processors, respectively. After applying diffractive optical computing as an optical

encoder for feature extraction, the light-induced photocurrents are directly used for

further calculation in an integrated analog computing chip without the requirement

of analog-to-digital converters, leading to a low computing latency of 72 ns for each

frame. With joint optimizations of optoelectronic computing and adaptive training,

ACCEL achieves competitive classification accuracies of 85.5%, 82.0% and 92.6%,

respectively, for Fashion-MNIST, 3-class ImageNet classification and time-lapse video

recognition task experimentally, while showing superior system robustness in low-

light conditions (0.14 fJ μm−2 each frame). ACCEL can be used across a broad range of

applications such as wearable devices, autonomous driving and industrial inspections.

Latex Bibtex Citation:

@article{chen2023all,

title={All-analog photoelectronic chip for high-speed vision tasks},

author={Chen, Yitong and Nazhamaiti, Maimaiti and Xu, Han and Meng, Yao and Zhou, Tiankuang and Li, Guangpu and Fan, Jingtao and Wei, Qi and Wu, Jiamin and Qiao, Fei and others},

journal={Nature},

pages={1--10},

year={2023},

publisher={Nature Publishing Group UK London}

}

X. Yuan, Y. Wang, Z. Xu, T. Zhou, and L. Fang,

Nature Communications, 2023.

Optoelectronic neural networks (ONN) are a promising avenue in AI com- puting due to their potential for parallelization, power efficiency, and speed. Diffractive neural networks, which process information by propagating encoded light through trained optical elements, have garnered interest. However, training large-scale diffractive networks faces challenges due to the computational and memory costs of optical diffraction modeling. Here, we present DANTE, a dual-neuron optical-artificial learning architecture. Optical neurons model the optical diffraction, while artificial neurons approximate the intensive optical-diffraction computations with lightweight functions. DANTE also improves convergence by employing iterative global artificial-learning steps and local optical-learning steps. In simulation experiments, DANTE successfully trains large-scale ONNs with 150 million neurons on ImageNet, previously unattainable, and accelerates training speeds significantly on the CIFAR-10 benchmark compared to single-neuron learning. In physical experi- ments, we develop a two-layer ONN system based on DANTE, which can effectively extract features to improve the classification of natural images.

Latex Bibtex Citation:

@article{yuan2023training,

title={Training large-scale optoelectronic neural networks with dual-neuron optical-artificial learning},

author={Yuan, Xiaoyun and Wang, Yong and Xu, Zhihao and Zhou, Tiankuang and Fang, Lu},

journal={Nature Communications},

volume={14},

number={1},

pages={7110},

year={2023},

publisher={Nature Publishing Group UK London}

}

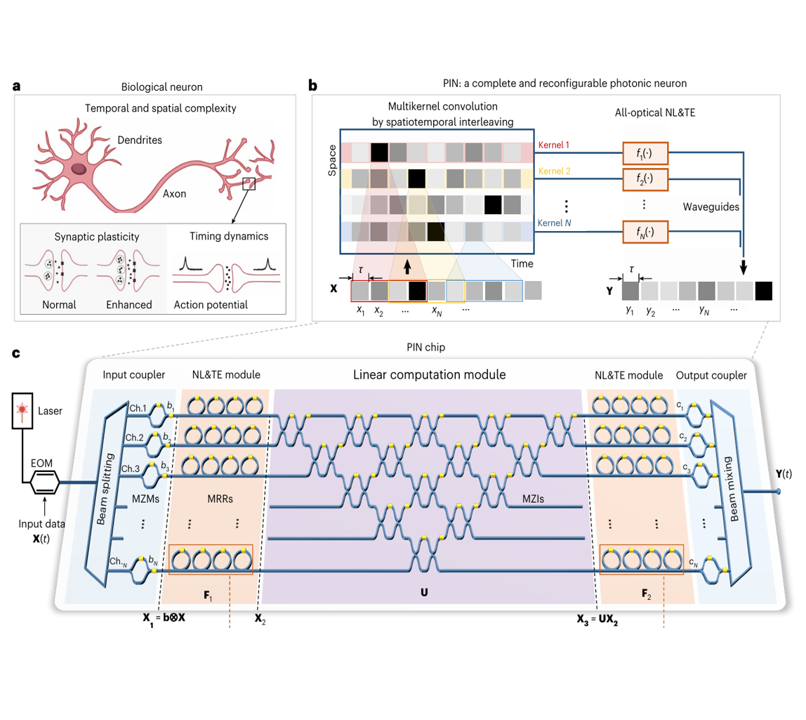

T. Zhou, W. Wu, J. Zhang, S. Yu, and L. Fang,

Science Advances, 2023.

Ultrafast dynamic machine vision in the optical domain can provide unprecedented perspectives for high-performance computing. However, owing to the limited degrees of freedom, existing photonic computing approaches rely on the memory’s slow read/write operations to implement dynamic processing. Here, we propose a spatiotemporal photonic computing architecture to match the highly parallel spatial computing with high-speed temporal computing and achieve a three-dimensional spatiotemporal plane. A unified training framework is devised to optimize the physical system and the network model. The photonic processing speed of the benchmark video dataset is increased by 40-fold on a space-multiplexed system with 35-fold fewer parameters. A wavelength-multiplexed system realizes all-optical nonlinear computing of dynamic light field with a frame time of 3.57 nanoseconds. The proposed architecture paves the way for ultrafast advanced machine vision free from the limits of memory wall and will find applications in unmanned systems, autonomous driving, ultrafast science, etc.

Latex Bibtex Citation:

@article{zhou2023ultrafast,

title={Ultrafast dynamic machine vision with spatiotemporal photonic computing},

author={Zhou, Tiankuang and Wu, Wei and Zhang, Jinzhi and Yu, Shaoliang and Fang, Lu},

journal={Science Advances},

volume={9},

number={23},

pages={eadg4391},

year={2023},

publisher={American Association for the Advancement of Science}

}

Y. Chen, T. Zhou, J. Wu, H. Qiao, X. Lin, L. Fang, and Q. Dai,

Science Advances, 2023.

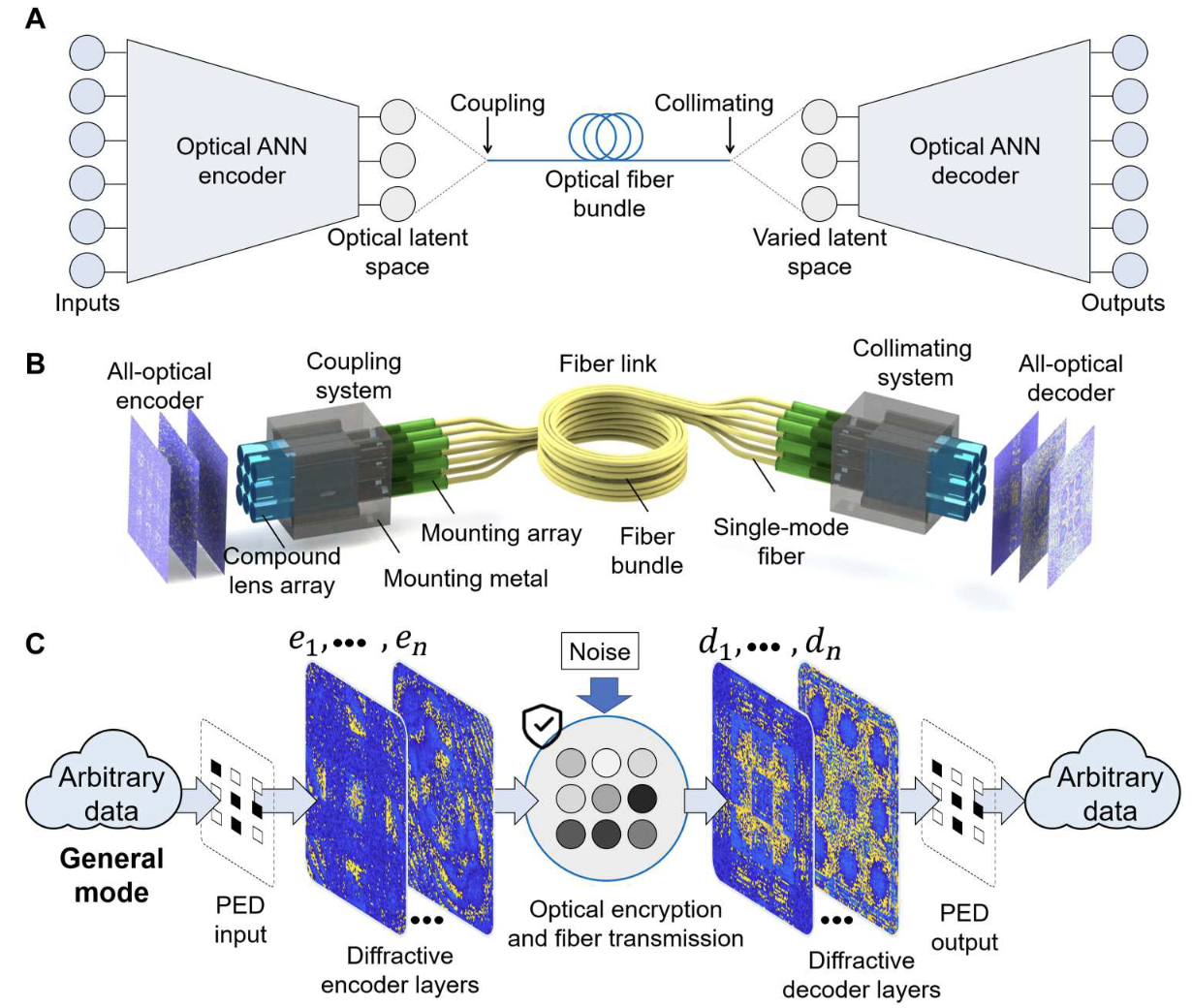

Following the explosive growth of global data, there is an ever-increasing demand for high-throughput processing in image transmission systems. However, existing methods mainly rely on electronic circuits, which severely limits the transmission throughput. Here, we propose an end-to-end all-optical variational autoencoder, named photonic encoder-decoder (PED), which maps the physical system of image transmission into an optical generative neural network. By modeling the transmission noises as the variation in optical latent space, the PED establishes a large-scale high-throughput unsupervised optical computing framework that integrates main computations in image transmission, including compression, encryption, and error correction to the optical domain. It reduces the system latency of computation by more than four orders of magnitude compared with the state-of-the-art devices and transmission error ratio by 57% than on-off keying. Our work points to the direction for a wide range of artificial intelligence–based physical system designs and next-generation communications.

Latex Bibtex Citation:

@article{chen2023photonic,

title={Photonic unsupervised learning variational autoencoder for high-throughput and low-latency image transmission},

author={Chen, Yitong and Zhou, Tiankuang and Wu, Jiamin and Qiao, Hui and Lin, Xing and Fang, Lu and Dai, Qionghai},

journal={Science Advances},

volume={9},

number={7},

pages={eadf8437},

year={2023},

publisher={American Association for the Advancement of Science}

}

Y. Zhang, G. Zhang, X. Han, J. Wu, Z. Li, X. Li, G. Xiao, H. Xie, L. Fang, and Q. Dai,

Nature Methods, 2023.

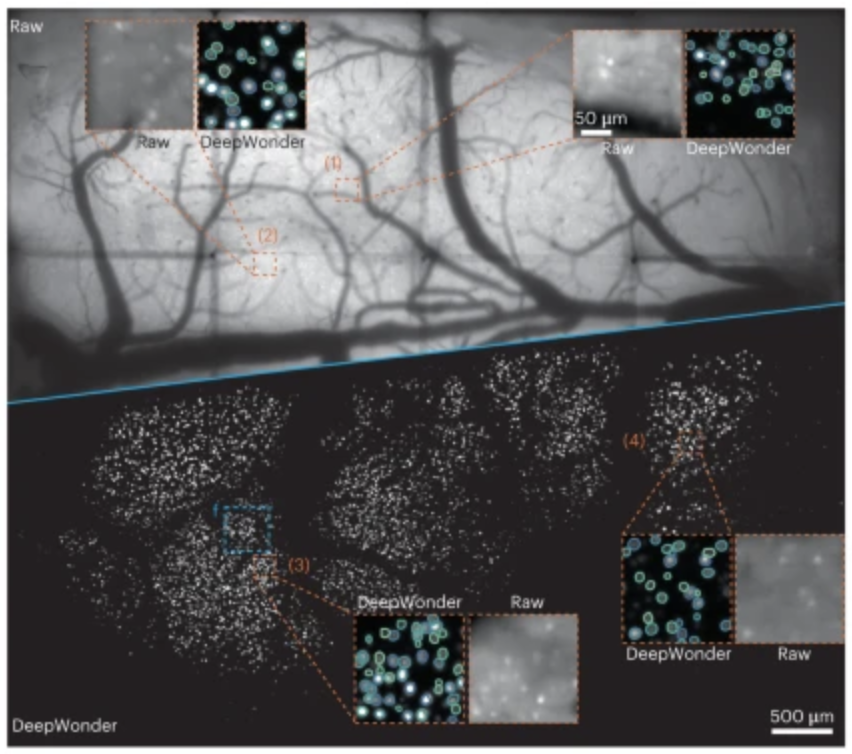

Widefeld microscopy can provide optical access to multi-millimeter felds of view and thousands of neurons in mammalian brains at video rate. However, tissue scattering and background contamination results in signal deterioration, making the extraction of neuronal activity challenging, laborious and time consuming. Here we present our deep-learning-based widefeld neuron fnder (DeepWonder), which is trained by simulated functional recordings and efectively works on experimental data to achieve high-fdelity neuronal extraction. Equipped with systematic background contribution priors, DeepWonder conducts neuronal inference with an order-of-magnitude-faster speed and improved accuracy compared with alternative approaches. DeepWonder removes background contaminations and is computationally efcient. Specifcally, DeepWonder accomplishes 50-fold signal-to-background ratio enhancement when processing terabytes-scale cortex-wide functional recordings, with over 14,000 neurons extracted in 17 h.

Latex Bibtex Citation:

@article{zhang2023rapid,

title={Rapid detection of neurons in widefield calcium imaging datasets after training with synthetic data},

author={Zhang, Yuanlong and Zhang, Guoxun and Han, Xiaofei and Wu, Jiamin and Li, Ziwei and Li, Xinyang and Xiao, Guihua and Xie, Hao and Fang, Lu and Dai, Qionghai},

journal={Nature Methods},

pages={1--8},

year={2023},

publisher={Nature Publishing Group US New York}

}

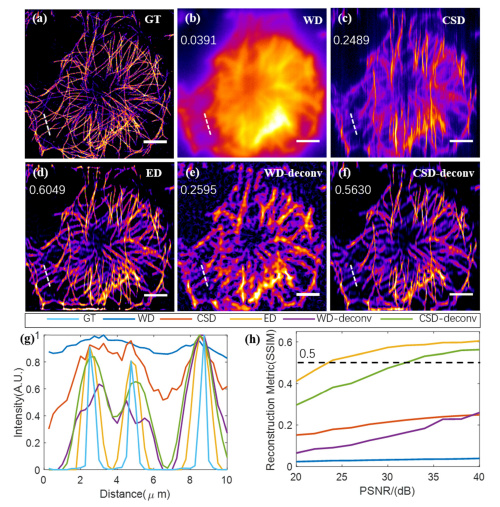

Y. Zhang, X. Song, J. Xie, J. Hu, J. Chen, X. Li, H. Zhang, Q. Zhou, L. Yuan, C. Kong, Y. Shen, J. Wu, L. Fang, and Q. Dai,

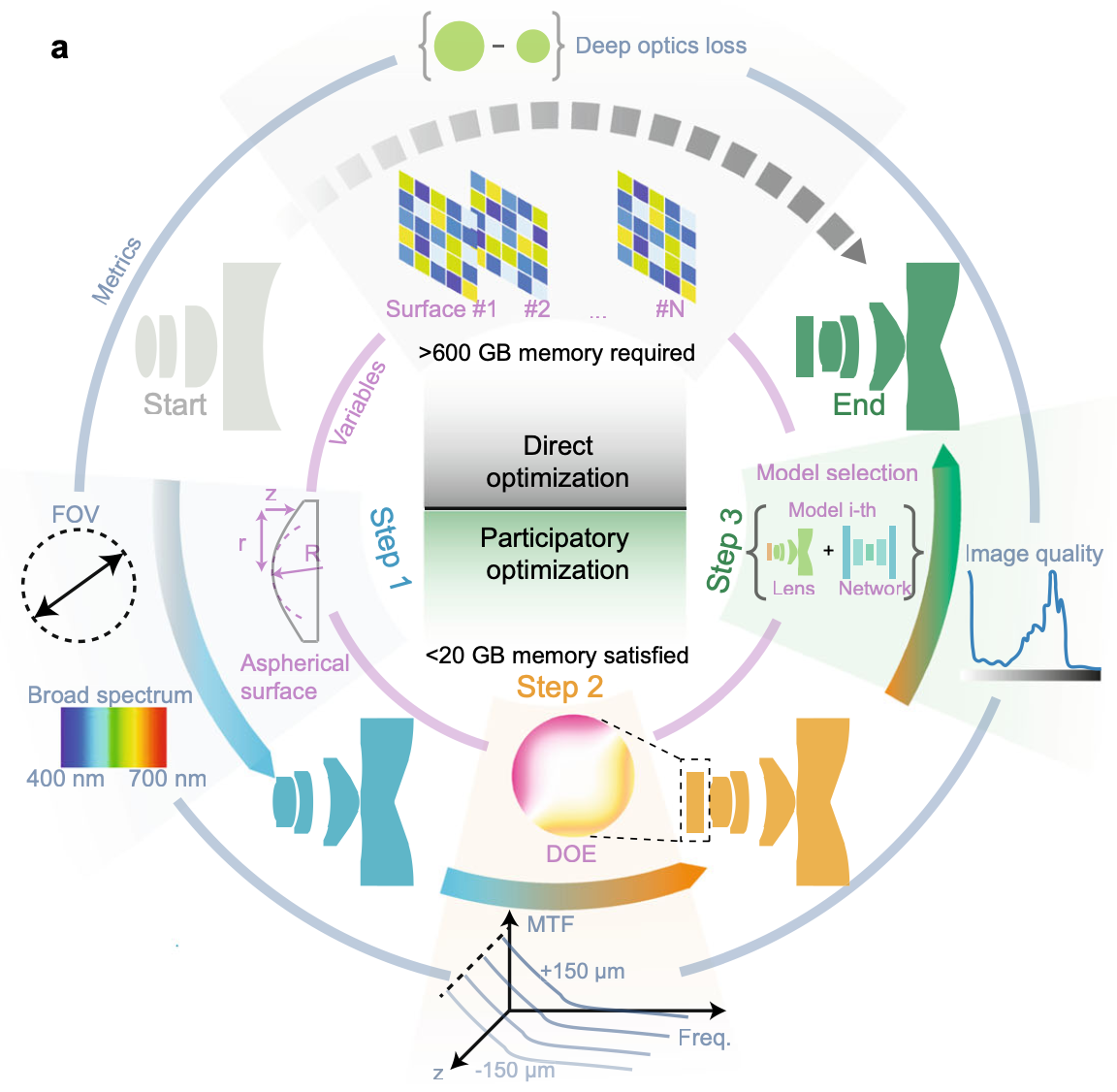

Nature Communications, 2023.

The optical microscope is customarily an instrument of substantial size and

expense but limited performance. Here we report an integrated microscope

that achieves optical performance beyond a commercial microscope with a 5×,

NA 0.1 objective but only at 0.15 cm^3 and 0.5 g, whose size is five orders of

magnitude smaller than that of a conventional microscope. To achieve this, a

progressive optimization pipeline is proposed which systematically optimizes

both aspherical lenses and diffractive optical elements with over 30 times

memory reduction compared to the end-to-end optimization. By designing a

simulation-supervision deep neural network for spatially varying deconvolu-

tion during optical design, we accomplish over 10 times improvement in the

depth-of-field compared to traditional microscopes with great generalization

in a wide variety of samples. To show the unique advantages, the integrated

microscope is equipped in a cell phone without any accessories for the

application of portable diagnostics. We believe our method provides a new

framework for the design of miniaturized high-performance imaging systems

by integrating aspherical optics, computational optics, and deep learning.

Latex Bibtex Citation:

@article{zhang2023large,

title={Large depth-of-field ultra-compact microscope by progressive optimization and deep learning},

author={Zhang, Yuanlong and Song, Xiaofei and Xie, Jiachen and Hu, Jing and Chen, Jiawei and Li, Xiang and Zhang, Haiyu and Zhou, Qiqun and Yuan, Lekang and Kong, Chui and others},

journal={Nature Communications},

volume={14},

number={1},

pages={4118},

year={2023},

publisher={Nature Publishing Group UK London}

}

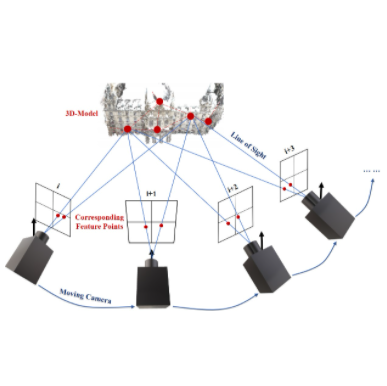

G. Wang, J. Zhang, K. Zhang, R. Huang, and L. Fang,

IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), Oct. 2023.

The rapid advances of high-performance sensation empowered gigapixel-level imaging/videography for large-scale scenes, yet the abundant details in gigapixel images were rarely valued in 3d reconstruction solutions. Bridging the gap between the sensation capacity and that of reconstruction requires to attack the large-baseline challenge imposed by the large-scale scenes, while utilizing the high-resolution details provided by the gigapixel images. This paper introduces GiganticNVS for gigapixel large-scale novel view synthesis (NVS). Existing NVS methods suffer from excessively blurred artifacts and fail on the full exploitation of image resolution, due to their inefficacy of recovering a faithful underlying geometry and the dependence on dense observations to accurately interpolate radiance. Our key insight is that, a highly-expressive implicit field with view-consistency is critical for synthesizing high-fidelity details from large-baseline observations. In light of this, we propose meta-deformed manifold, where meta refers to the locally defined surface manifold whose geometry and appearance are embedded into high-dimensional latent space. Technically, meta can be decoded as neural fields using an MLP (i.e., implicit representation). Upon this novel representation, multi-view geometric correspondence can be effectively enforced with featuremetric deformation and the reflectance field can be learned purely on the surface. Experimental results verify that the proposed method outperforms state-of-the-art methods both quantitatively and qualitatively, not only on the standard datasets containing complex real-world scenes with large baseline angles, but also on the challenging gigapixel-level ultra-large-scale benchmarks.

Latex Bibtex Citation:

@ARTICLE {10274871,

author = {G. Wang and J. Zhang and K. Zhang and R. Huang and L. Fang},

journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},

title = {GiganticNVS: Gigapixel Large-scale Neural Rendering with Implicit Meta-deformed Manifold},

year = {2023},

volume = {},

number = {01},

issn = {1939-3539},

pages = {1-15},

doi = {10.1109/TPAMI.2023.3323069},

publisher = {IEEE Computer Society},

address = {Los Alamitos, CA, USA},

month = {oct}

}

X. Chen, S. Xu, X. Zou, T. Cao, D. Yeung and L. Fang,

Proc. of IEEE International Conference on Computer Vision (ICCV), 2023.

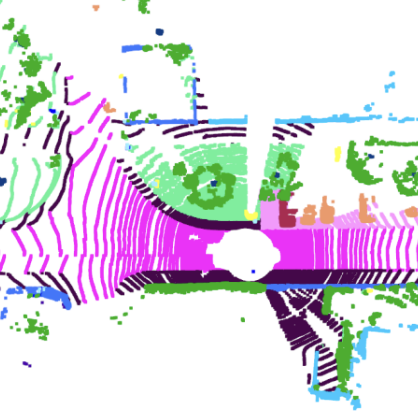

LiDAR-based semantic perception tasks are critical yet challenging for autonomous driving. Due to the motion of objects and static/dynamic occlusion, temporal information plays an essential role in reinforcing perception by enhancing and completing single-frame knowledge. Previous approaches either directly stack historical frames to the current frame or build a 4D spatio-temporal neighborhood using KNN, which duplicates computation and hinders realtime performance. Based on our observation that stacking all the historical points would damage performance due to a large amount of redundant and misleading information, we propose the Sparse Voxel-Adjacent Query Network (SVQNet) for 4D LiDAR semantic segmentation. To take full advantage of the historical frames high-efficiently, we shunt the historical points into two groups with reference to the current points. One is the Voxel-Adjacent Neighborhood carrying local enhancing knowledge. The other is the Historical Context completing the global knowledge. Then we propose new modules to select and extract the instructive features from the two groups. Our SVQNet achieves state-of-the-art performance in LiDAR semantic segmentation of the SemanticKITTI benchmark and the nuScenes dataset.

Latex Bibtex Citation:

@inproceedings{chen2023svqnet,

title={SVQNet: Sparse Voxel-Adjacent Query Network for 4D Spatio-Temporal LiDAR Semantic Segmentation},

author={Chen, Xuechao and Xu, Shuangjie and Zou, Xiaoyi and Cao, Tongyi and Yeung, Dit-Yan and Fang, Lu},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

pages={8569--8578},

year={2023}

}

H. Ying, B. Jiang, J. Zhang, D. Xu, T. Yu, Q. Dai, and L. Fang,

Proc. of IEEE International Conference on Computer Vision (ICCV), 2023.

This paper proposes a method for fast scene radiance field reconstruction with strong novel view synthesis perfor- mance and convenient scene editing functionality. The key idea is to fully utilize semantic parsing and primitive extrac- tion for constraining and accelerating the radiance field re- construction process. To fulfill this goal, a primitive aware hybrid rendering strategy was proposed to enjoy the best of both volumetric and primitive rendering. We further con- tribute a reconstruction pipeline conducts primitive parsing and radiance field learning iteratively for each input frame which successfully fuse semantic, primitive and radiance in- formation into a single framework. Extensive evaluations demonstrate the fast reconstruction ability, high rendering quality and convenient editing functionality of our method.

Latex Bibtex Citation:

@InProceedings{Ying_2023_ICCV, author = {Ying, Haiyang and Jiang, Baowei and Zhang, Jinzhi and Xu, Di and Yu, Tao and Dai, Qionghai and Fang, Lu}, title = {PARF: Primitive-Aware Radiance Fusion for Indoor Scene Novel View Synthesis}, booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)}, month = {October}, year = {2023}, pages = {17706-17716} }

H. Lin, Z. Chen, J. Zhang, B. Bai, Y. Wang, R. Huang, and L. Fang,

Proc. of IEEE International Conference on Computer Vision (ICCV), 2023.

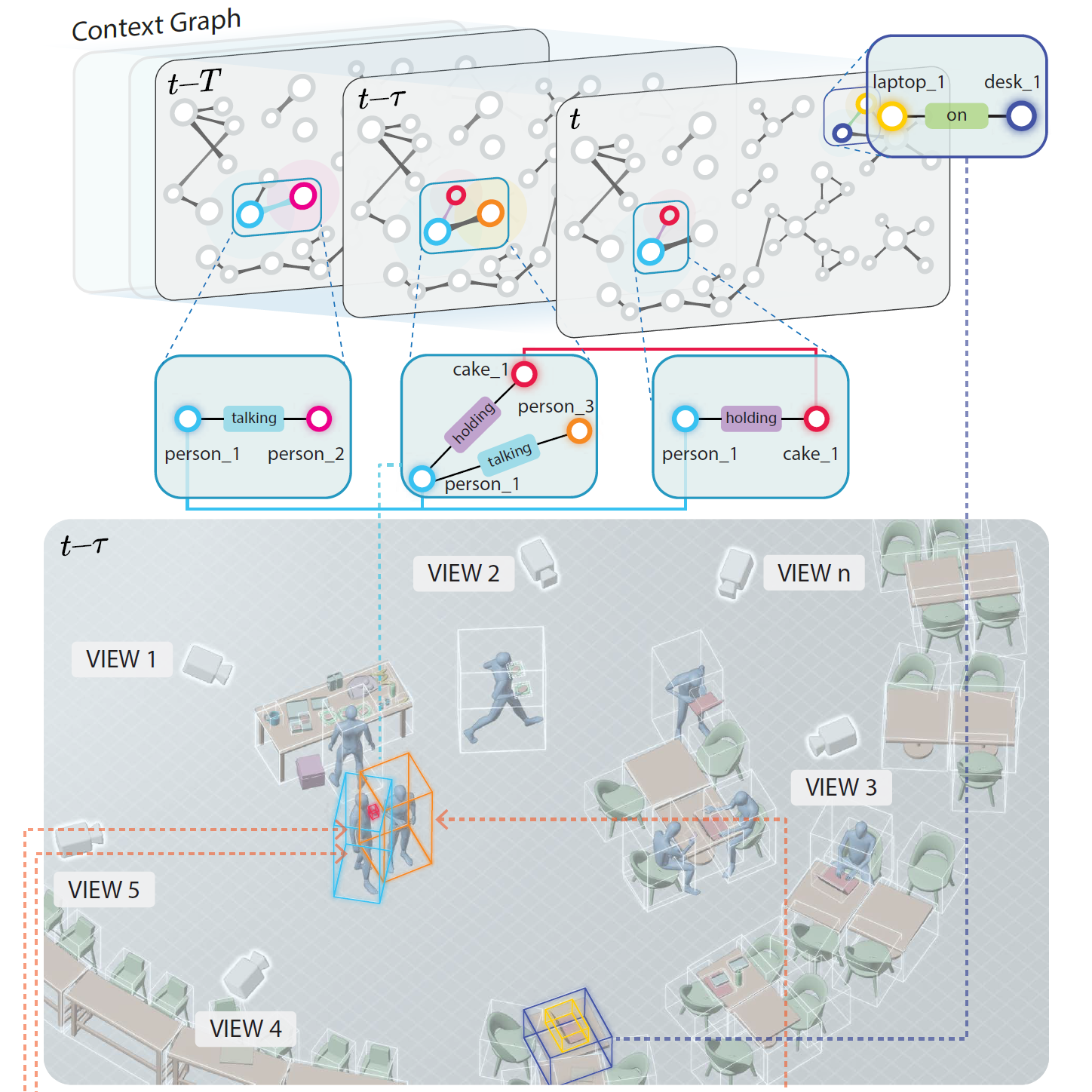

Understanding 4D scene context in real world has become urgently critical for deploying sophisticated AI systems. In this paper, we propose a brand new scene understanding paradigm called ''Context Graph Generation (CGG)'', aiming at abstracting holistic semantic information in the complicated 4D world. The CGG task capitalizes on the calibrated multiview videos of a dynamic scene, and targets at recovering semantic information (coordination, trajectories and relationships) of the presented objects in the form of spatio-temporal context graph in 4D space. We also present a benchmark 4D video dataset "RealGraph'', the first dataset tailored for the proposed CGG task. The raw data of RealGraph is composed of calibrated and synchronized multiview videos. We exclusively provide manual annotations including object 2D&3D bounding boxes, category labels and semantic relationships. We also make sure the annotated ID for every single object is temporally and spatially consistent. We propose the first CGG baseline algorithm, Multiview-based Context Graph Generation Network (MCGNet), to empirically investigate the legitimacy of CGG task on RealGraph dataset. We nevertheless reveal the great challenges behind this task and encourage the community to explore beyond our solution.

Latex Bibtex Citation:

C. Wei, Y. Wang, B. Bai, K. Ni, D. Brady, and L. Fang,

Proceedings of the 40th International Conference on Machine Learning (ICML).

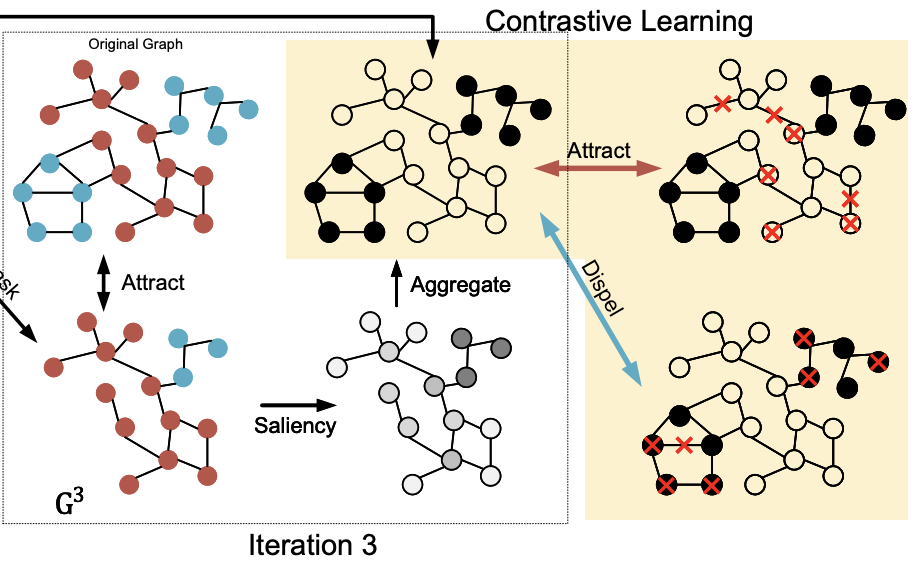

Graph augmentation plays a crucial role in achieving good generalization for contrastive graph selfsupervised learning. However, mainstream Graph Contrastive Learning (GCL) often favors random graph augmentations, by relying on random node dropout or edge perturbation on graphs. Random augmentations may inevitably lead to semantic information corruption during the training, and force the network to mistakenly focus on semantically irrelevant environmental background structures. To address these limitations and to improve generalization, we propose a novel selfsupervised learning framework for GCL, which can adaptively screen the semantic-related substructure in graphs by capitalizing on the proposed gradient-based Graph Contrastive Saliency (GCS). The goal is to identify the most semantically discriminative structures of a graph via contrastive learning, such that we can generate semantically meaningful augmentations by leveraging on saliency. Empirical evidence on 16 benchmark datasets demonstrates the exclusive merits of the GCS-based framework. We also provide rigorous theoretical justification for GCS’s robustness properties.

Latex Bibtex Citation:

@article{wei2023boosting,

title={Boosting Graph Contrastive Learning via Graph Contrastive Saliency},

author={Wei, Chunyu and Wang, Yu and Bai, Bing and Ni, Kai and Brady, David J and Fang, Lu},

year={2023}

}

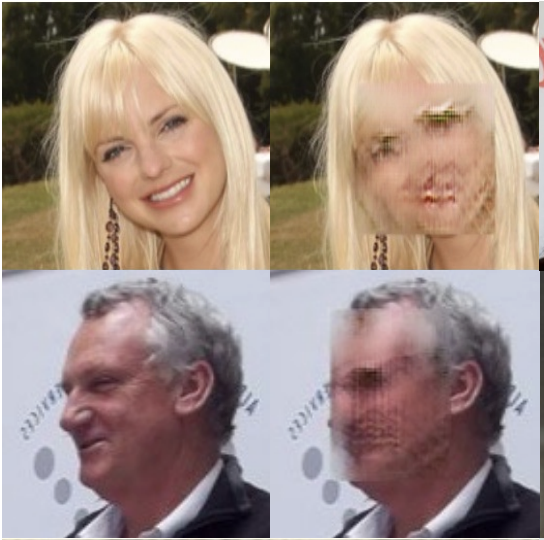

B. Jiang, B. Bai, H. Lin, Y. Wang, Y. Guo, and L. Fang,

Proc. of Computer Vision and Pattern Recognition (CVPR), 2023.

Nowadays, privacy issue has become a top priority when training AI algorithms. Machine learning algorithms are expected to benefit our daily life, while personal information must also be carefully protected from exposure. Facial information is particularly sensitive in this regard. Multiple datasets containing facial information have been taken offline, and the community is actively seeking solutions to remedy the privacy issues. Existing methods for privacy preservation can be divided into blur-based and face replacement-based methods. Owing to the advantages of review convenience and good accessibility, blur-based based methods have become a dominant choice in practice. However, blur-based methods would inevitably introduce training artifacts harmful to the performance of downstream tasks. In this paper, we propose a novel De-artifact Blurring (DartBlur) privacy-preserving method, which capitalizes on a DNN architecture to generate blurred faces. DartBlur can effectively hide facial privacy information while detection artifacts are simultaneously suppressed. We have designed four training objectives that particularly aim to improve review convenience and maximize detection artifact suppression. We associate the algorithm with an adversarial training strategy with a second-order optimization pipeline. Experimental results demonstrate that DartBlur outperforms the existing face-replacement method from both perspectives of review convenience and accessibility, and also shows an exclusive advantage in suppressing the training artifact compared to traditional blur-based methods.

Latex Bibtex Citation:

@inproceedings{jiang2023dartblur,

title={DartBlur: Privacy Preservation With Detection Artifact Suppression},

author={Jiang, Baowei and Bai, Bing and Lin, Haozhe and Wang, Yu and Guo, Yuchen and Fang, Lu},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={16479--16488},

year={2023}

}

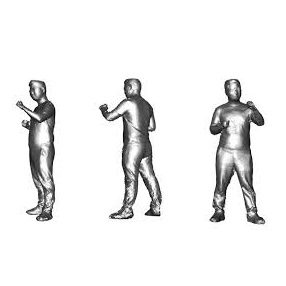

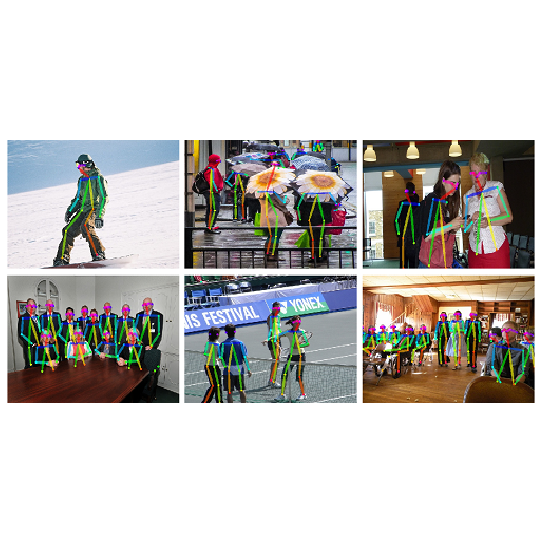

H. Wen, J. Huang, H. Cui, H. Lin, Y. Lai, L. Fang, and K. Li,

Proc. of Computer Vision and Pattern Recognition (CVPR), 2023.

Image-based multi-person reconstruction in wide-field large scenes is critical for crowd analysis and security alert. However, existing methods cannot deal with large scenes containing hundreds of people, which encounter the challenges of large number of people, large variations in human scale, and complex spatial distribution. In this paper, we propose Crowd3D, the first framework to reconstruct the 3D poses, shapes and locations of hundreds of people with global consistency from a single large-scene image. The core of our approach is to convert the problem of complex crowd localization into pixel localization with the help of our newly defined concept, Human-scene Virtual Interaction Point (HVIP). To reconstruct the crowd with global consistency, we propose a progressive reconstruction network based on HVIP by pre-estimating a scene-level camera and a ground plane. To deal with a large number of persons and various human sizes, we also design an adaptive human-centric cropping scheme. Besides, we contribute a benchmark dataset, LargeCrowd, for crowd reconstruction in a large scene. Experimental results demonstrate the effectiveness of the proposed method.

Latex Bibtex Citation:

@inproceedings{wen2023crowd3d,

title={Crowd3D: Towards hundreds of people reconstruction from a single image},

author={Wen, Hao and Huang, Jing and Cui, Huili and Lin, Haozhe and Lai, Yu-Kun and Fang, Lu and Li, Kun},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={8937--8946},

year={2023}

} 2022

J. Wu, Y. Guo, C. Deng, A. Zhang, H. Qiao, Z. Lu, J. Xie, L. Fang, and Q. Dai,

Nature (2022).

Planar digital image sensors facilitate broad applications in a wide range of areas and the number of pixels has scaled up rapidly in recent years2,6. However, the practical performance of imaging systems is fundamentally limited by spatially nonuniform optical aberrations originating from imperfect lenses or environmental disturbances. Here we propose an integrated scanning light-field imaging sensor, termed a meta-imaging sensor, to achieve high-speed aberration-corrected three-dimensional photography for universal applications without additional hardware modifications. Instead of directly detecting a two-dimensional intensity projection, the meta-imaging sensor captures extra-fine four-dimensional light-field distributions through a vibrating coded microlens array, enabling flexible and precise synthesis of complex-field-modulated images in post-processing. Using the sensor, we achieve high-performance photography up to a gigapixel with a single spherical lens without a data prior, leading to orders-of-magnitude reductions in system capacity and costs for optical imaging. Even in the presence of dynamic atmosphere turbulence, the meta-imaging sensor enables multisite aberration correction across 1,000 arcseconds on an 80-centimetre ground-based telescope without reducing the acquisition speed, paving the way for high-resolution synoptic sky surveys. Moreover, high-density accurate depth maps can be retrieved simultaneously, facilitating diverse applications from autonomous driving to industrial inspections.

Latex Bibtex Citation:

Z. Xu, X. Yuan, T. Zhou and L. Fang,

Light: Science & Applications, volume 11, Article number: 255 (2022).

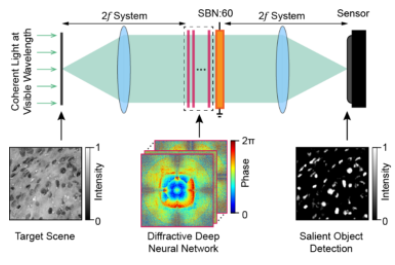

Endowed with the superior computing speed and energy efficiency, optical neural networks (ONNs) have attracted ever-growing attention in recent years. Existing optical computing architectures are mainly single-channel due to the lack of advanced optical connection and interaction operators, solving simple tasks such as hand-written digit classification, saliency detection, etc. The limited computing capacity and scalability of single-channel ONNs restrict the optical implementation of advanced machine vision. Herein, we develop Monet: a multichannel optical neural network architecture for a universal multiple-input multiple-channel optical computing based on a novel projection-interference-prediction framework where the inter- and intra- channel connections are mapped to optical interference and diffraction. In our Monet, optical interference patterns are generated by projecting and interfering the multichannel inputs in a shared domain. These patterns encoding the correspondences together with feature embeddings are iteratively produced through the projection-interference process to predict the final output optically. For the first time, Monet validates that multichannel processing properties can be optically implemented with high-efficiency, enabling real-world intelligent multichannel-processing tasks solved via optical computing, including 3D/motion detections. Extensive experiments on different scenarios demonstrate the effectiveness of Monet in handling advanced machine vision tasks with comparative accuracy as the electronic counterparts yet achieving a ten-fold improvement in computing efficiency. For intelligent computing, the trends of dealing with real-world advanced tasks are irreversible. Breaking the capacity and scalability limitations of single-channel ONN and further exploring the multichannel processing potential of wave optics, we anticipate that the proposed technique will accelerate the development of more powerful optical AI as critical support for modern advanced machine vision.

Latex Bibtex Citation:

@article{xu2022multichannel,

title={A multichannel optical computing architecture for advanced machine vision},

author={Xu, Zhihao and Yuan, Xiaoyun and Zhou, Tiankuang and Fang, Lu},

journal={Light: Science \& Applications},

volume={11},

number={1},

pages={1--13},

year={2022},

publisher={Nature Publishing Group}

}

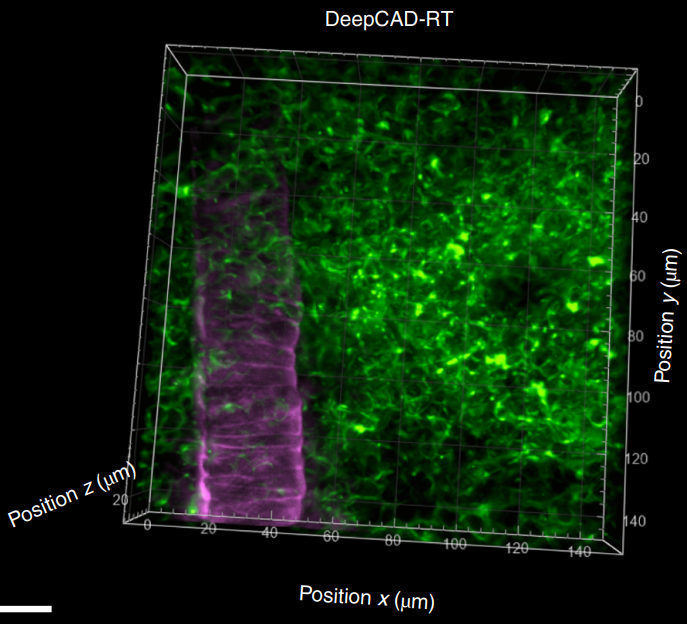

X. Li, Y. Li, Y. Zhou, J. Wu, Z. Zhao, J. Fan, F. Deng, Z. Wu, G. Xiao, J. He, Y. Zhang, G. Zhang, X. Hu, X. Chen, Y. Zhang, H. Qiao, H. Xie, Y. Li, H. Wang, L. Fang, and Q. Dai,

Nature Biotechnology, 2022: 1-11.

A fundamental challenge in fluorescence microscopy is the photon shot noise arising from the inevitable stochasticity of

photon detection. Noise increases measurement uncertainty and limits imaging resolution, speed and sensitivity. To achieve

high-sensitivity fluorescence imaging beyond the shot-noise limit, we present DeepCAD-RT, a self-supervised deep learning method for real-time noise suppression. Based on our previous framework DeepCAD, we reduced the number of network

parameters by 94%, memory consumption by 27-fold and processing time by a factor of 20, allowing real-time processing on

a two-photon microscope. A high imaging signal-to-noise ratio can be acquired with tenfold fewer photons than in standard

imaging approaches. We demonstrate the utility of DeepCAD-RT in a series of photon-limited experiments, including in vivo

calcium imaging of mice, zebrafish larva and fruit flies, recording of three-dimensional (3D) migration of neutrophils after acute

brain injury and imaging of 3D dynamics of cortical ATP release. DeepCAD-RT will facilitate the morphological and functional

interrogation of biological dynamics with a minimal photon budget.

Latex Bibtex Citation:

@article{li2022real,

title={Real-time denoising enables high-sensitivity fluorescence time-lapse imaging beyond the shot-noise limit},

author={Li, Xinyang and Li, Yixin and Zhou, Yiliang and Wu, Jiamin and Zhao, Zhifeng and Fan, Jiaqi and Deng, Fei and Wu, Zhaofa and Xiao, Guihua and He, Jing and others},

journal={Nature Biotechnology},

pages={1--11},

year={2022},

publisher={Nature Publishing Group}

}

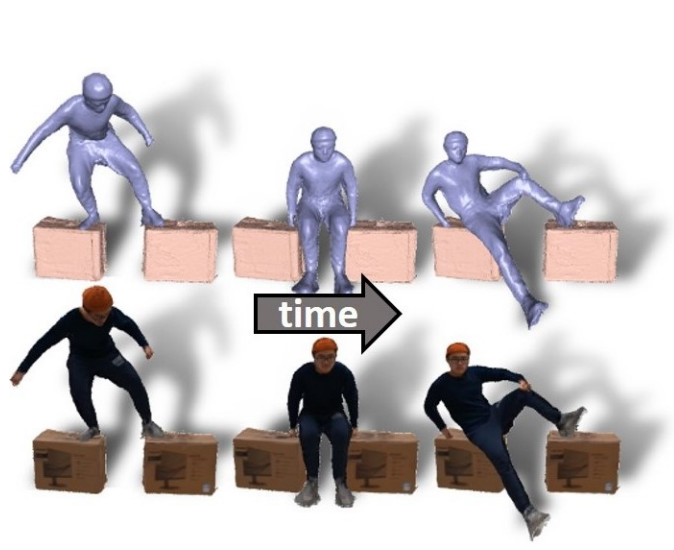

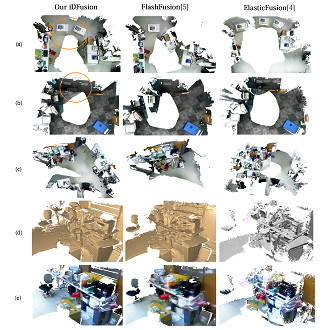

Z. Su, L. Xu, D. Zhong, Z. Li, F. Deng, S. Quan, L. Fang,

IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), Oct. 2022.

High-quality 4D reconstruction of human performance with complex interactions to various objects is essential in real-world scenarios, which enables numerous immersive VR/AR applications. However, recent advances still fail to provide reliable performance reconstruction, suffering from challenging interaction patterns and severe occlusions, especially for the monocular setting. To fill this gap, in this paper, we propose RobustFusion, a robust volumetric performance reconstruction system for human-object interaction scenarios using only a single RGBD sensor, which combines various data-driven visual and interaction cues to handle the complex interaction patterns and severe occlusions. We propose a semantic-aware scene decoupling scheme to model the occlusions explicitly, with a segmentation refinement and robust object tracking to prevent disentanglement uncertainty and maintain temporal consistency. We further introduce a robust performance capture scheme with the aid of various data-driven cues, which not only enables re-initialization ability, but also models the complex human-object interaction patterns in a data-driven manner. To this end, we introduce a spatial relation prior to prevent implausible intersections, as well as data-driven interaction cues to maintain natural motions, especially for those regions under severe human-object occlusions. We also adopt an adaptive fusion scheme for temporally coherent human-object reconstruction with occlusion analysis and human parsing cue. Extensive experiments demonstrate the effectiveness of our approach to achieve high-quality 4D human performance reconstruction under complex human-object interactions whilst still maintaining the lightweight monocular setting.

Latex Bibtex Citation:

@ARTICLE{9925090,

author={Su, Zhuo and Xu, Lan and Zhong, Dawei and Li, Zhong and Deng, Fan and Quan, Shuxue and Fang, Lu},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

title={RobustFusion: Robust Volumetric Performance Reconstruction Under Human-Object Interactions From Monocular RGBD Stream},

year={2022},

volume={},

number={},

pages={1-17},

doi={10.1109/TPAMI.2022.3215746}}

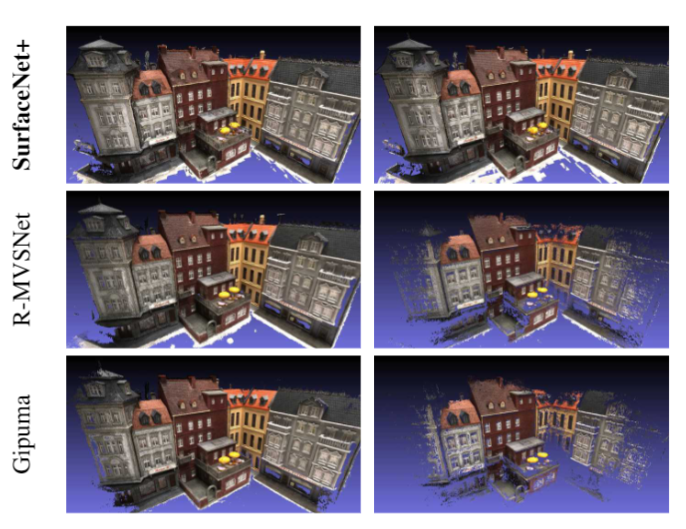

J. Zhang, R. Tang, Z. Cao, J. Xiao, R. Huang, and L. Fang,

Proc. of Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS).

Self-supervised multi-view stereopsis (MVS) attracts increasing attention for learning dense surface predictions from only a set of images without onerous ground-truth 3D training data for supervision. However, existing methods highly rely on the local photometric consistency, which fail to identify accurately dense correspondence in broad textureless or reflectant areas. In this paper, we show that geometric proximity such as surface connectedness and occlusion boundaries implicitly inferred from images could serve as reliable guidance for pixel-wise multi-view correspondences. With this insight, we present a novel elastic part representation, which encodes physically-connected part segmentations with elastically-varying scales, shapes and boundaries. Meanwhile, a self-supervised MVS framework namely ElasticMVS is proposed to learn the representation and estimate per-view depth following a part-aware propagation and evaluation scheme. Specifically, the pixel-wise part representation is trained by a contrastive learning-based strategy, which increases the representation compactness in geometrically concentrated areas and contrasts otherwise. ElasticMVS iteratively optimizes a part-level consistency loss and a surface smoothness loss, based on a set of depth hypotheses propagated from the geometrically concentrated parts. Extensive evaluations convey the superiority of ElasticMVS in the reconstruction completeness and accuracy, as well as the efficiency and scalability. Particularly, for the challenging large-scale reconstruction benchmark, ElasticMVS demonstrates significant performance gain over both the supervised and self-supervised approaches.

Latex Bibtex Citation:

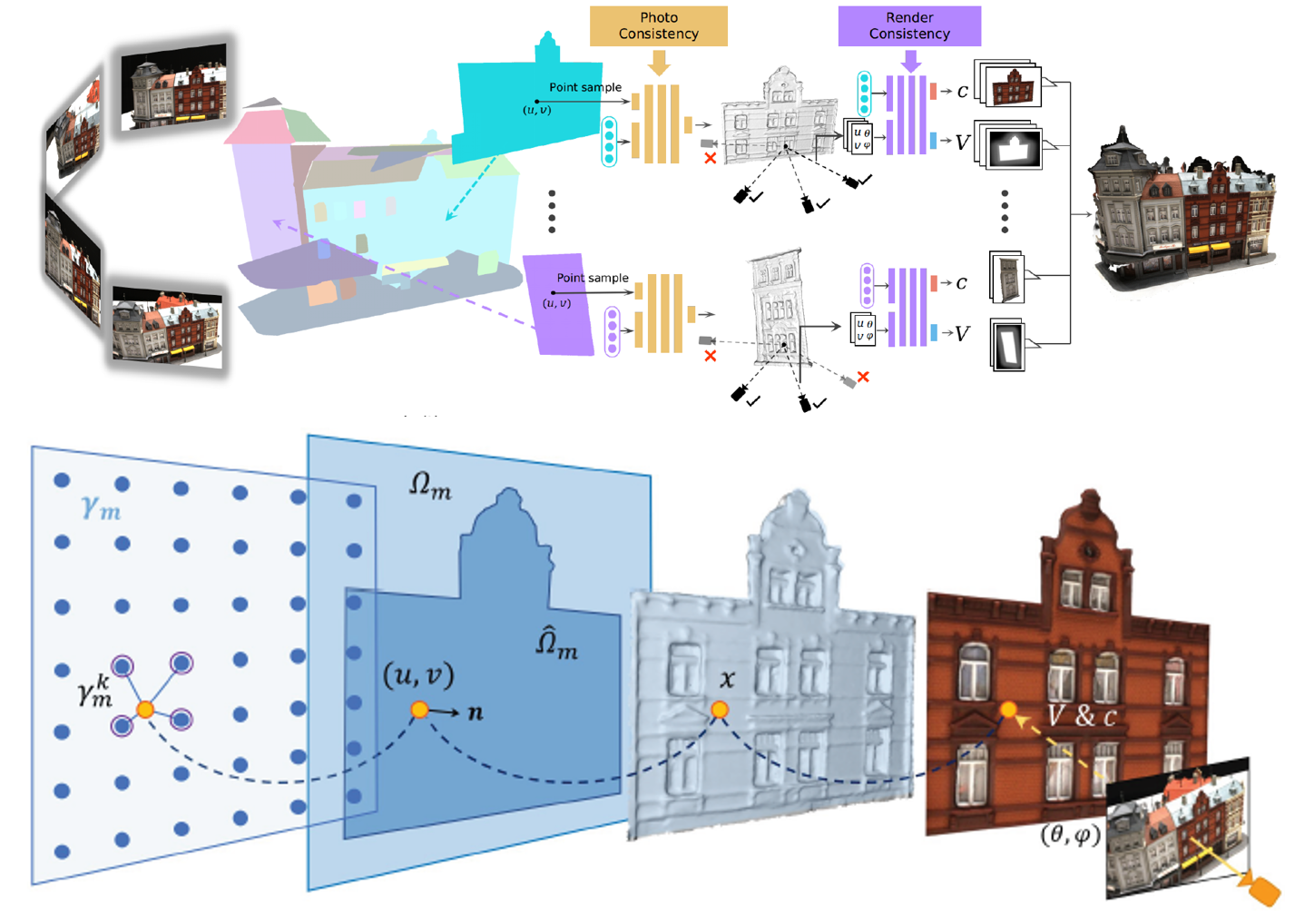

H. Ying, J. Zhang, Y. Chen, Z. Cao, J. Xiao, R. Huang, L. Fang,

Proc. of The 30th ACM International Conference on Multimedia (MM' 22).

Multi-view stereopsis (MVS) recovers 3D surfaces by finding dense photo-consistent correspondences from densely sampled images. In this paper, we tackle the challenging MVS task from sparsely sampled views (up to an order of magnitude fewer images), which is more practical and cost-efficient in applications. The major challenge comes from the significant correspondence ambiguity introduced by the severe occlusions and the highly skewed patches. On the other hand, such ambiguity can be resolved by incorporating geometric cues from the global structure. In light of this, we propose ParseMVS, boosting sparse MVS by learning the Primitive-AwaRe Surface rEpresentation. In particular, on top of being aware of global structure, our novel representation further allows for the preservation of fine details including geometry, texture, and visibility. More specifically, the whole scene is parsed into multiple geometric primitives. On each of them, the geometry is defined as the displacement along the primitives’ normal directions, together with the texture and visibility along each view direction. An unsupervised neural network is trained to learn these factors by progressively increasing the photo-consistency and render-consistency among all input images. Since the surface properties are changed locally in the 2D space of each primitive, ParseMVS can preserve global primitive structures while optimizing local details, handling the ‘incompleteness’ and the ‘inaccuracy’ problems. We experimentally demonstrate that ParseMVS constantly outperforms the state-ofthe-art surface reconstruction method in both completeness and the overall score under varying sampling sparsity, especially under the extreme sparse-MVS settings. Beyond that, ParseMVS also shows great potential in compression, robustness, and efficiency.

Latex Bibtex Citation:

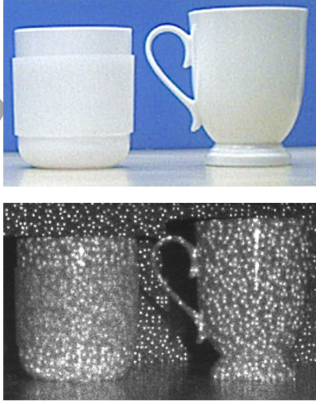

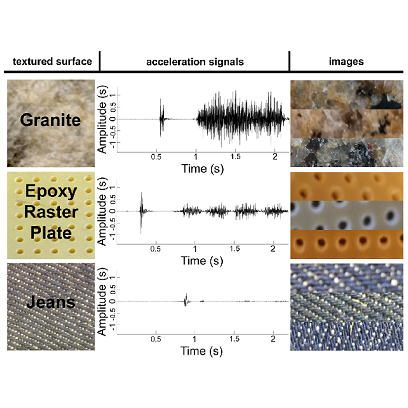

S. Mao, M. Ji, B. Wang, Q. Dai, L. Fang,

IEEE Journal of Selected Topics in Signal Processing, 2022.

Accurately perceiving object surface material is critical for scene understanding and robotic manipulation. However, it is ill-posed because the imaging process entangles material, lighting, and geometry in a complex way. Appearance-based methods cannot disentangle lighting and geometry variance and have difficulties in textureless regions. We propose a novel multimodal fusion method for surface material perception using the depth camera shooting structured laser dots. The captured active infrared image was decomposed into diffusive and dot modalities and their connection with different material optical properties (i.e. reflection and scattering) were revealed separately. The geometry modality, which helps to disentangle material properties from geometry variations, is derived from the rendering equation and calculated based on the depth image obtained from the structured light camera. Further, together with the texture feature learned from the gray modality, a multimodal learning method is proposed for material perception. Experiments on synthesized and captured datasets validate the orthogonality of learned features. The final fusion method achieves 92.5% material accuracy, superior to state-of-the-art appearancebased methods (78.4%).

Latex Bibtex Citation:

@article{mao2022surface,

title={Surface Material Perception Through Multimodal Learning},

author={Mao, Shi and Ji, Mengqi and Wang, Bin and Dai, Qionghai and Fang, Lu},

journal={IEEE Journal of Selected Topics in Signal Processing},

year={2022},

publisher={IEEE}

}

We propose INS-Conv, an INcremental Sparse Convolutional network which enables online accurate 3D semantic and instance segmentation. Benefiting from the incremental nature of RGB-D reconstruction, we only need to update the residuals between the reconstructed scenes of consecutive frames, which are usually sparse. For layer design, we define novel residual propagation rules for sparse convolution operations, achieving close approximation to standard sparse convolution. For network architecture, an uncertainty term is proposed to adaptively select which residual to update, further improving the inference accuracy and efficiency. Based on INS-Conv, an online joint 3D semantic and instance segmentation pipeline is proposed, reaching an inference speed of 15 FPS on GPU and 10 FPS on CPU. Experiments on ScanNetv2 and SceneNN datasets show that the accuracy of our method surpasses previous online methods by a large margin, and is on par with state-of-the-art offline methods. A live demo on portable devices further shows the superior performance of INS-Conv.

Latex Bibtex Citation:

@inproceedings{liu2022ins,

title={INS-Conv: Incremental Sparse Convolution for Online 3D Segmentation},

author={Liu, Leyao and Zheng, Tian and Lin, Yun-Jou and Ni, Kai and Fang, Lu},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={18975--18984},

year={2022}

}

L. Han, D. Zhong, L. Li, K. Zheng and L. Fang,

IEEE Trans. on Image Processing (TIP), Feb. 2022.

Scene Representation Networks (SRN) have been proven as a powerful tool for novel view synthesis in recent works. They learn a mapping function from the world coordinates of spatial

points to radiance color and the scene’s density using a fully connected network. However, scene texture contains complex high-frequency details in practice that is hard to be memorized by

a network with limited parameters, leading to disturbing blurry effects when rendering novel views. In this paper, we propose to learn ‘residual color’ instead of ‘radiance color’ for novel view synthesis, i.e., the residuals between surface color and reference color. Here the reference color is calculated based on spatial color priors, which are extracted from input view observations. The beauty of such a strategy lies in that the residuals between radiance color and reference are close to zero for most spatial points thus are easier to learn. A novel view synthesis system that

learns the residual color using SRN is presented in this paper. Experiments on public datasets demonstrate that the proposed method achieves competitive performance in preserving highresolution details, leading to visually more pleasant results than the state of the arts.

Latex Bibtex Citation:

@article{Han2022,

author = {Han, Lei and Zhong, Dawei and Li, Lin and Zheng, Kai and and Fang, Lu},

title = {Learning Residual Color for Novel View Synthesis},

journal = {IEEE Transactions on Image Processing (TIP)},

year = {2022},

type = {Journal Article}

}

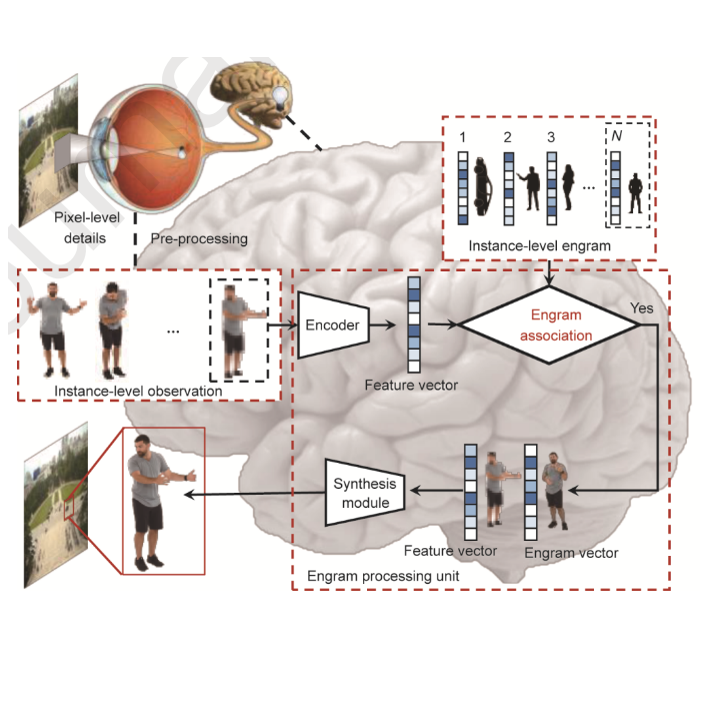

L. Fang, M. Ji, X. Yuan, J. He, J. Zhang, Y. Zhu, T. Zheng, L. Liu, B. Wang and Q. Dai

Engineering, Feb. 2022.

Sensing and understanding large-scale dynamic scenes require a high-performance imaging system. Conventional imaging systems pursue higher capability by simply increasing the pixel resolution via stitching cameras at the expense of a bulky system. Moreover, they strictly follow the feedforward pathway: that is, their pixel-level sensing is independent of semantic understanding. Differently, a human visual system owns superiority with both feedforward and feedback pathways: The feedforward pathway extracts object representation (referred to as memory engram) from visual inputs, while, in the feedback pathway, the associated engram is reactivated to generate hypotheses about an object. Inspired by this, we propose a dual-pathway imaging mechanism, called engram-driven videography. We start by abstracting the holistic representation of the scene, which is associated bidirectionally with local details, driven by an instance-level engram. Technically, the entire system works by alternating between the excitation–inhibition and association states. In the former state, pixel-level details become dynamically consolidated or inhibited to strengthen the instance-level engram. In the association state, the spatially and temporally consistent content becomes synthesized driven by its engram for outstanding videography quality of future scenes. The association state serves as the imaging of future scenes by synthesizing spatially and temporally consistent content driven by its engram. Results of extensive simulations and experiments demonstrate that the proposed system revolutionizes the conventional videography paradigm and shows great potential for videography of large-scale scenes with multi-objects.

Latex Bibtex Citation:

@article{fang2022engram,

title={Engram-Driven Videography},

author={Fang, Lu and Ji, Mengqi and Yuan, Xiaoyun and He, Jing and Zhang, Jianing and Zhu, Yinheng and Zheng, Tian and Liu, Leyao and Wang, Bin and Dai, Qionghai},

journal={Engineering},

year={2022},

publisher={Elsevier}

}

2021

T. Zhou, X. Lin, J. Wu, Y. Chen, H. Xie, Y. Li, J. Fan, H. Wu, L. Fang and Q. Dai,

Nature Photonics, 2021: 1-7. (cover article)

There is an ever-growing demand for artificial intelligence. Optical processors, which compute with photons instead of electrons, can fundamentally accelerate the development of artificial intelligence by offering substantially improved computing performance. There has been long-term interest in optically constructing the most widely used artificial-intelligence architecture, that is, artificial neural networks, to achieve brain-inspired information processing at the speed of light. However, owing to restrictions in design flexibility and the accumulation of system errors, existing processor architectures are not reconfigurable and have limited model complexity and experimental performance. Here, we propose the reconfigurable diffractive processing unit, an optoelectronic fused computing architecture based on the diffraction of light, which can support different neural networks and achieve a high model complexity with millions of neurons. Along with the developed adaptive training approach to circumvent system errors, we achieved excellent experimental accuracies for high-speed image and video recognition over benchmark datasets and a computing performance superior to that of cutting-edge electronic computing platforms.

Latex Bibtex Citation:

@article{zhou2021large,

title={Large-scale neuromorphic optoelectronic computing with a reconfigurable diffractive processing unit},

author={Zhou, Tiankuang and Lin, Xing and Wu, Jiamin and Chen, Yitong and Xie, Hao and Li, Yipeng and Fan, Jingtao and Wu, Huaqiang and Fang, Lu and Dai, Qionghai},

journal={Nature Photonics},

pages={1--7},

year={2021},

publisher={Nature Publishing Group}

}

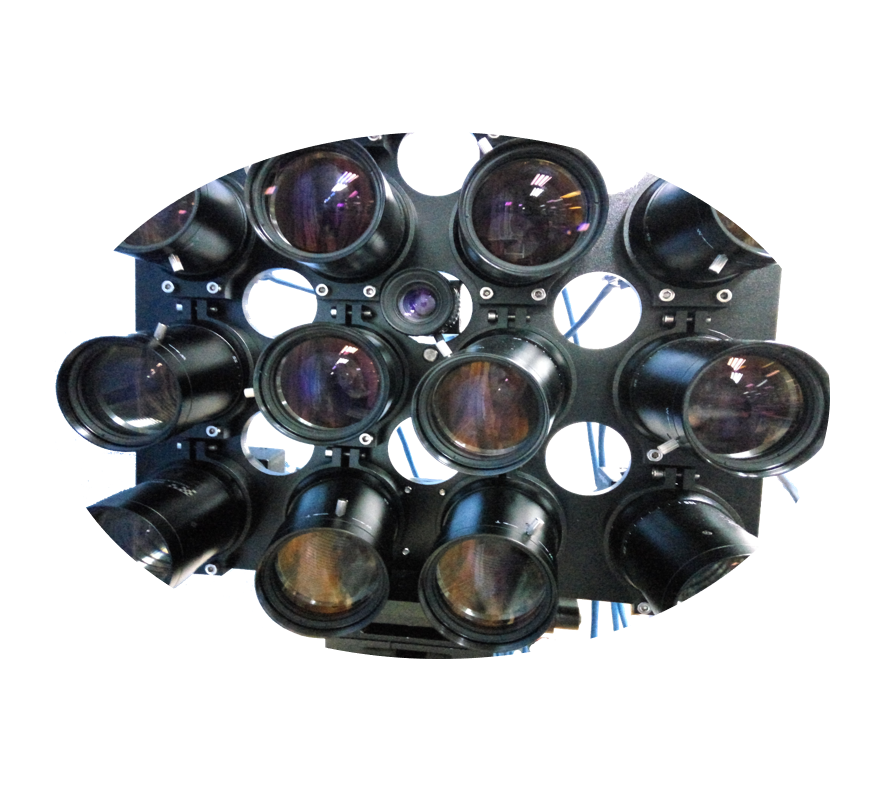

X. Yuan, M. Ji, J. Wu, D. Brady, Q. Dai and L. Fang,

Light: Science & Applications, volume 10, Article number: 37 (2021). (cover article)

Array cameras removed the optical limitations of a single camera and paved the way for high-performance imaging via the combination of micro-cameras and computation to fuse multiple aperture images. However, existing solutions use dense arrays of cameras that require laborious calibration and lack flexibility and practicality. Inspired by the cognition function principle of the human brain, we develop an unstructured array camera system that adopts a hierarchical modular design with multiscale hybrid cameras composing different modules. Intelligent computations are designed to collaboratively operate along both intra- and intermodule pathways. This system can adaptively allocate imagery resources to dramatically reduce the hardware cost and possesses unprecedented flexibility, robustness, and versatility. Large scenes of real-world data were acquired to perform human-centric studies for the assessment of human behaviours at the individual level and crowd behaviours at the population level requiring high-resolution long-term monitoring of dynamic wide-area scenes.

Latex Bibtex Citation:

@article{yuan2021modular,

title={A modular hierarchical array camera},

author={Yuan, Xiaoyun and Ji, Mengqi and Wu, Jiamin and Brady, David J and Dai, Qionghai and Fang, Lu},

journal={Light: Science \& Applications},

volume={10},

number={1},

pages={1--9},

year={2021},

publisher={Nature Publishing Group}

}

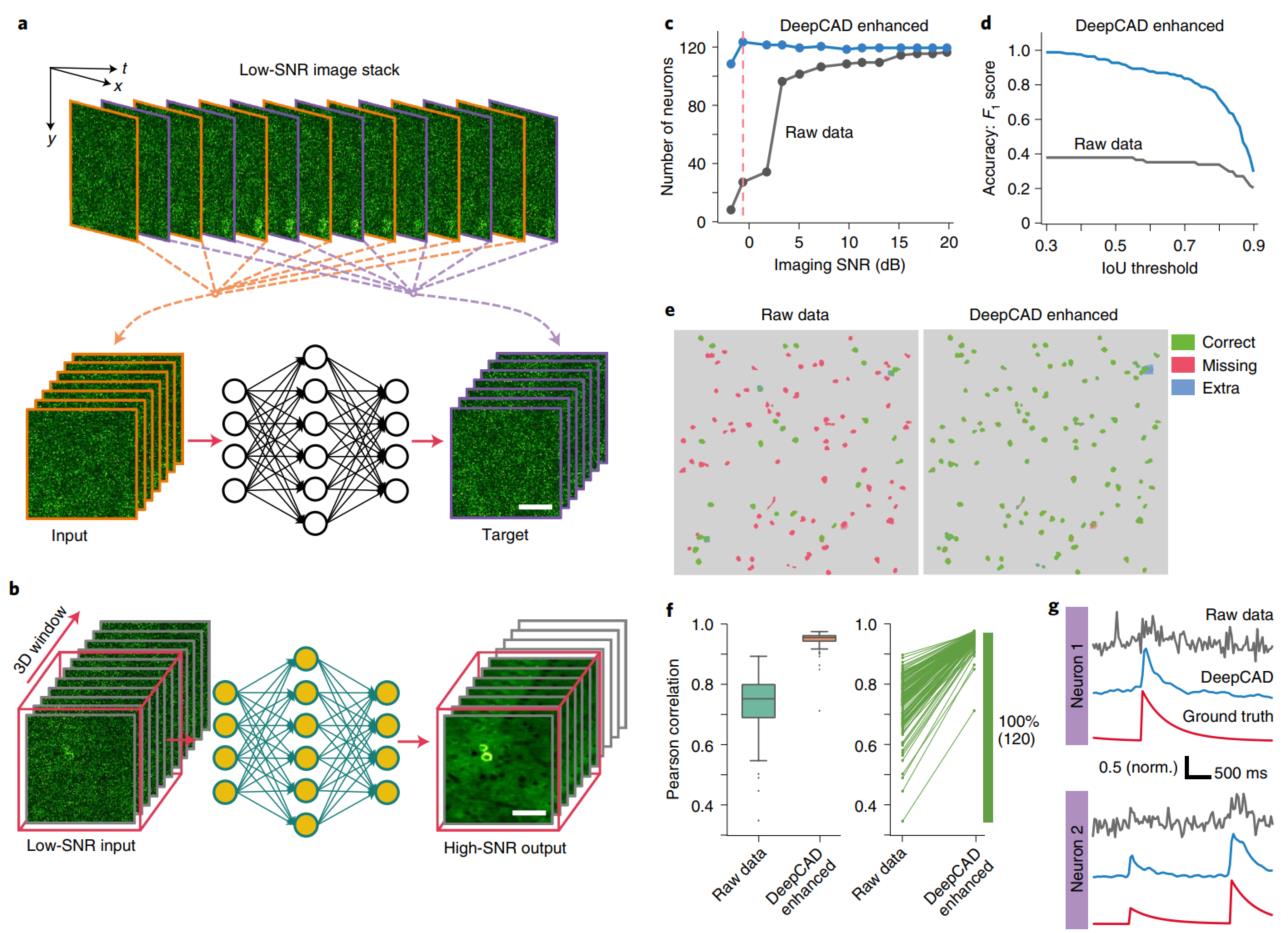

X. Li, G. Zhang, J. Wu, Y. Zhang, Z. Zhao, X. Lin, H. Qiao, H. Xie, H. Wang, L. Fang and Q. Dai,

Nature Methods, volume 18, pages 1395–1400 (2021).

Calcium imaging has transformed neuroscience research by providing a methodology for monitoring the activity of neural circuits with single-cell resolution. However, calcium imaging is inherently susceptible to detection noise, especially when imaging with high frame rate or under low excitation dosage. Here we developed DeepCAD, a self-supervised deep-learning method for spatiotemporal enhancement of calcium imaging data that does not require any high signal-to-noise ratio (SNR) observations. DeepCAD suppresses detection noise and improves the SNR more than tenfold, which reinforces the accuracy of neuron extraction and spike inference and facilitates the functional analysis of neural circuits.

Latex Bibtex Citation:

@article{li2021reinforcing,

title={Reinforcing neuron extraction and spike inference in calcium imaging using deep self-supervised denoising},

author={Li, Xinyang and Zhang, Guoxun and Wu, Jiamin and Zhang, Yuanlong and Zhao, Zhifeng and Lin, Xing and Qiao, Hui and Xie, Hao and Wang, Haoqian and Fang, Lu and others},

journal={Nature Methods},

volume={18},

number={11},

pages={1395--1400},

year={2021},

publisher={Nature Publishing Group}

}

J. Zhang, M. Ji, G. Wang, X. Zhiwei, S. Wang, L. Fang,

IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), Sep. 2021.

The recent success in supervised multi-view stereopsis (MVS) relies on the onerously collected real-world 3D data. While the latest differentiable rendering techniques enable unsupervised MVS, they are restricted to discretized (e.g., point cloud) or implicit geometric representation, suffering from either low integrity for a textureless region or less geometric details for complex scenes. In this paper, we propose SurRF, an unsupervised MVS pipeline by learning Surface Radiance Field, i.e., a radiance field defined on a continuous and explicit 2D surface. Our key insight is that, in a local region, the explicit surface can be gradually deformed from a continuous initialization along view-dependent camera rays by differentiable rendering. That enables us to define the radiance field only on a 2D deformable surface rather than in a dense volume of 3D space, leading to compact representation while maintaining complete shape and realistic texture for large-scale complex scenes. We experimentally demonstrate that the proposed SurRF produces competitive results over the-state-of-the-art on various real-world challenging scenes, without any 3D supervision. Moreover, SurRF shows great potential in owning the joint advantages of mesh (scene manipulation), continuous surface (high geometric resolution), and radiance field (realistic rendering).

Latex Bibtex Citation:

@ARTICLE{9555381,

author={Zhang, Jinzhi and Ji, Mengqi and Wang, Guangyu and Zhiwei, Xue and Wang, Shengjin and Fang, Lu},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

title={SurRF: Unsupervised Multi-view Stereopsis by Learning Surface Radiance Field},

year={2021},

volume={},

number={},

pages={1-1},

doi={10.1109/TPAMI.2021.3116695}}

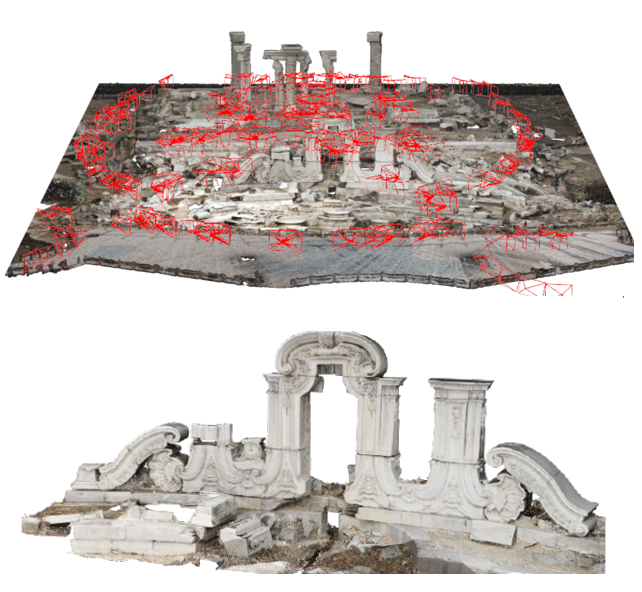

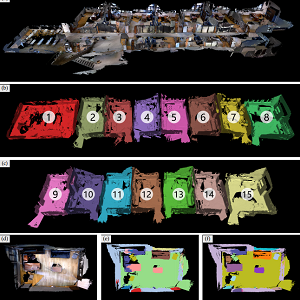

J. Zhang, J. Zhang, S. Mao, M. Ji, G. Wang, Z. Chen, T. Zhang, X. Yuan, Q. Dai and L. Fang,

IEEE Trans. on Pattern Analysis and Machine Intelligence (TPAMI), Sep. 2021.

Multiview stereopsis (MVS) methods, which can reconstruct both the 3D geometry and texture from multiple images, have been rapidly developed and extensively investigated from the feature engineering methods to the data-driven ones. However, there is no dataset containing both the 3D geometry of large-scale scenes and high-resolution observations of small details to benchmark the algorithms. To this end, we present GigaMVS, the first gigapixel-image-based 3D reconstruction benchmark for ultra-large-scale scenes. The gigapixel images, with both wide field-of-view and high-resolution details, can clearly observe both the Palace-scale scene structure and Relievo-scale local details. The ground-truth geometry is captured by the laser scanner, which covers ultra-large-scale scenes with an average area of 8667 m^2 and a maximum area of 32007 m^2. Due to the extremely large scale, complex occlusion, and gigapixel-level images, GigaMVS brings the problem to light that emerged from the poor effectiveness and efficiency of the existing MVS algorithms. We thoroughly investigate the state-of-the-art methods in terms of geometric and textural measurements, which point to the weakness of existing methods and promising opportunities for future works. We believe that GigaMVS can benefit the community of 3D reconstruction and support the development of novel algorithms balancing robustness, scalability, and accuracy.

Latex Bibtex Citation:

@ARTICLE{9547729,

author={Zhang, Jianing and Zhang, Jinzhi and Mao, Shi and Ji, Mengqi and Wang, Guangyu and Chen, Zequn and Zhang, Tian and Yuan, Xiaoyun and Dai, Qionghai and Fang, Lu},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

title={GigaMVS: A Benchmark for Ultra-large-scale Gigapixel-level 3D Reconstruction},

year={2021},

volume={},

number={},

pages={1-1},

doi={10.1109/TPAMI.2021.3115028}}

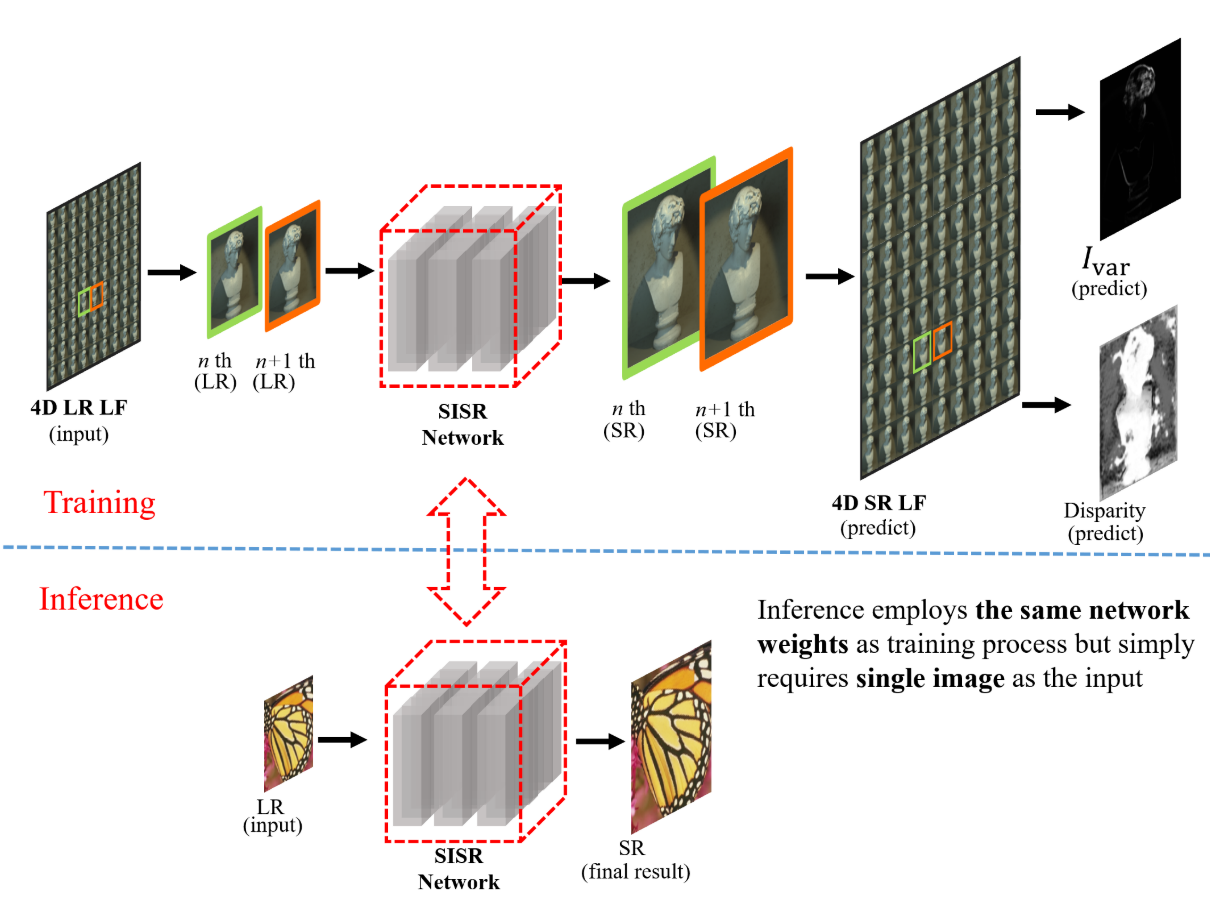

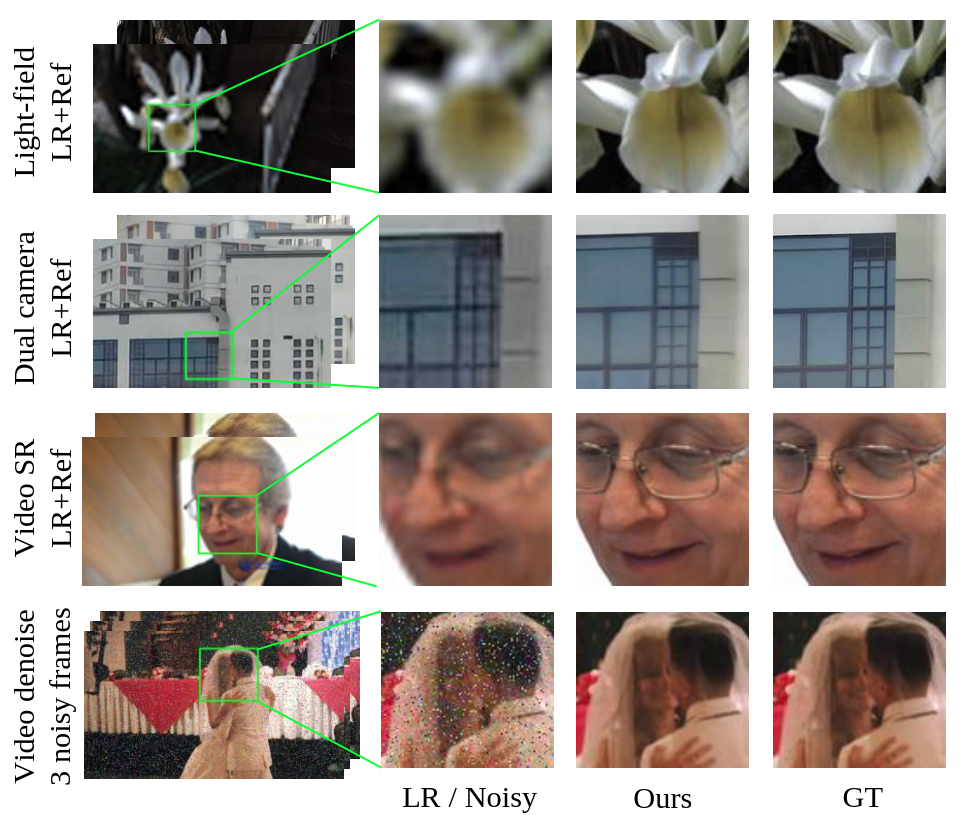

D. Jin, M. Ji, L. Xu, G. Wu, L. Wang, and L. Fang,

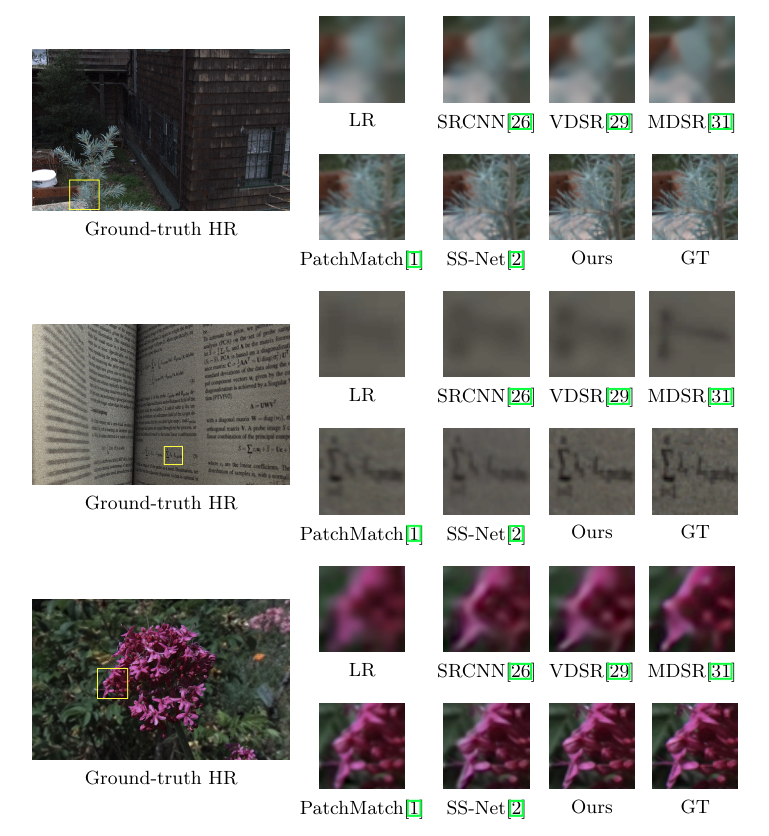

IEEE Trans. on Image Processing (TIP), Feb. 2021.

Learning-based single image super-resolution (SISR) aims to learn a versatile mapping from low resolution (LR) image to its high resolution (HR) version. The critical challenge is to bias the network training towards continuous and sharp edges. For the first time in this work, we propose an implicit boundary prior learnt from multi-view observations to significantly mitigate the challenge in SISR we outline. Specifically, the multi-image prior that encodes both disparity information and boundary structure of the scene supervise a SISR network for edge-preserving. For simplicity, in the training procedure of our framework, light field (LF) serves as an effective multi-image prior, and a hybrid loss function jointly considers the content, structure, variance as well as disparity information from 4D LF data. Consequently, for inference, such a general training scheme boosts the performance of various SISR networks, especially for the regions along edges. Extensive experiments on representative backbone SISR architectures constantly show the effectiveness of the proposed method, leading to around 0.6 dB gain without modifying the network architecture.

Latex Bibtex Citation:

@article{RN455,

author = {Jin, Dingjian and Ji, Mengqi and Xu, Lan and Wu, Gaochang and Wang, Liejun and Fang, Lu},

title = {Boosting Single Image Super-Resolution Learnt From Implicit Multi-Image Prior},

journal = {IEEE Transactions on Image Processing (TIP)},

volume = {30},

pages = {3240-3251},

ISSN = {1941-0042 (Electronic)

1057-7149 (Linking)},

DOI = {10.1109/TIP.2021.3059507},

url = {https://www.ncbi.nlm.nih.gov/pubmed/33621177},

year = {2021},

type = {Journal Article}

}

M. Hu, Y. Li, L. Fang and S. Wang,

Proc. of Computer Vision and Pattern Recognition (CVPR), 2021.

Learning pyramidal feature representations is crucial for recognizing object instances at different scales. Feature Pyramid Network (FPN) is the classic architecture to build a feature pyramid with high-level semantics throughout. However, intrinsic defects in feature extraction and fusion inhibit FPN from further aggregating more discriminative features. In this work, we propose Attention Aggregation based Feature Pyramid Network (A2 -FPN), to improve multi-scale feature learning through attention-guided feature aggregation. In feature extraction, it extracts discriminative features by collecting-distributing multi-level global context features, and mitigates the semantic information loss due to drastically reduced channels. In feature fusion, it aggregates complementary information from adjacent features to generate location-wise reassembly kernels for content-aware sampling, and employs channelwise reweighting to enhance the semantic consistency before element-wise addition. A2 -FPN shows consistent gains on different instance segmentation frameworks. By replacing FPN with A2 -FPN in Mask R-CNN, our model boosts the performance by 2.1% and 1.6% mask AP when using ResNet-50 and ResNet-101 as backbone, respectively. Moreover, A2 -FPN achieves an improvement of 2.0% and 1.4% mask AP when integrated into the strong baselines such as Cascade Mask R-CNN and Hybrid Task Cascade.

Latex Bibtex Citation:

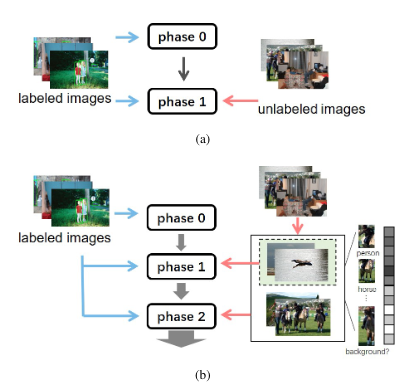

Z. Wang, Y. Li, Y. Guo, L. Fang and S. Wang,

Proc. of Computer Vision and Pattern Recognition (CVPR), 2021.